Key Takeaways

- An internal grant allowed two instructors at the University of Minnesota to address design challenges in their Medieval Cities of Europe course.

- Of particular concern were poor student attendance of in-class films and lack of attention by those who did attend, which an initial pilot targeted using Twitter and Wordle.

- These cloud-based student response systems encouraged productive intellectual discourse among students on Twitter and helped the instructors identify and address misperceptions apparent in word clouds.

- Unable to find a closed, authenticated, anonymous environment, the university developed a cloud-based SRS called ChimeIn that powered the same pedagogical strategies as the Twitter/Wordle pilot in a user-friendly, scalable experience, while also allowing for more classic "clicker-style" uses for multiple-choice questions.

Kay Reyerson and her graduate assistant Kevin Mummey applied for and received an internally funded instructional grant to address design challenges in their course Medieval Cities of Europe. One of the grant's goals was to encourage experimentation that might lead to scalable, sustainable, transformative, technology-enhanced pedagogies that could better serve the entire university community and perhaps the greater education community as well.

Among the things the two instructors hoped to address with an initial pilot were poor attendance of in-class films and lack of attention to the films among those who did attend. Historically, the instructors had shown the films and then tried to engage students in a discussion about the themes. In a class of between 75 and 80 undergraduates, however, they often heard from only one or two learners and had little information about the thoughts of the others. The instructors considered the films useful as source information, and they wanted to motivate students not only to attend the lectures but also to approach and critique the films with intellectual curiosity in class and perhaps even among themselves.

Pilot: Twitter and Wordle Go Medieval

To address these challenges, the course design team considered a number of technology-enhanced pedagogies. Our student response system, or clickers, didn't allow for the free-form text input that the instructors envisioned, given the limited nature of the multiple-choice response. We wanted a means by which students could enter their own thoughts and potentially ask and answer one another's questions in a feed, but that could also be aggregated in such a way as to identify themes and common ideas to guide the post-film discussion.

The metaphor that began to emerge organically in our discussion resembled Twitter, and we began to talk about students tweeting their thoughts and conversing with one another online in a community of scholarship while watching the films during class. Twitter is a micro-blogging environment in which users write short-form posts of 140 or fewer characters. It has several key features and affordances that were attractive to us:

- First, setting up a Twitter account is free.

- Second, it works on a cloud-computing metaphor, which meant that students could access it from any web- or text-enabled device — likely one already in their pockets or backpacks.

- Third, the short-form response would encourage an economy of thought, rather than lengthy rambles.

- Finally, the persistence of the tweets, and the Web 2.0 capabilities of RSS, made it a great candidate for allowing us to mine the data in post-class discussion and word clouds.

Cloud computing, as we use it here, refers to applications and data that exist on the Internet and are generally accessible from a number of devices. The end users (in this case, faculty and students) don't need to know where the data are stored — they simply have to remember the access point to retrieve the data. Imagine if you were to tweet using a traditional e-mail mechanism. Students would write their 140 characters in a word processor, save the file, and then e-mail the instructor with the attachment. In a cloud-based metaphor, learners simply log on to an application (in this case, Twitter) on the Internet and share their thoughts, which are saved "in the cloud." The application knows where to find the data and shows users what they are credentialed to see.

An appreciable and emerging literature addresses the use of Twitter as an instructional tool (see, for example, work by Joanna Dunlap and Patrick Lowenthal1), but the results have been far from overwhelmingly positive.2 Our approach to teaching with Twitter leverages what has become known as the backchannel: the ongoing, co-constructed, meta-content discussion that can accompany live demonstrations of nearly any type (lectures, conference talks, performances, or, in our case, communal viewing of movies). Several interesting projects have attempted to leverage the backchannel pedagogically in recent years.3

Twitter might work well as an instructional tool to let students backchannel their thoughts on the films, but we wanted to aggregate the free-text responses in some meaningful way. Again, somewhat organically, we gravitated to the idea of using word clouds — visual representations of text that emphasize and deemphasize words, excluding articles and prepositions, by showing their relative size given the frequency with which they appear in the text. For example, if the word "Medieval" appeared four times in a paragraph of text and "city" only once, "Medieval" would appear four times the size of "city" in the resulting word cloud. The literature offers little around using word clouds in instruction, but some precedent does exist for this type of approach.4
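To make the sizing rule concrete, here is a minimal sketch in Python of how a word cloud's weights could be derived. It is not Wordle's actual algorithm: the stop-word list, the `base_size` parameter, and the strictly linear scaling are assumptions chosen to match the "four times the size" example above.

```python
import re
from collections import Counter

# Hypothetical, deliberately small stop-word list (articles and prepositions).
STOP_WORDS = {"a", "an", "the", "of", "in", "on", "at", "to", "for", "with", "by", "from"}

def word_weights(text):
    """Count each non-stop word, ignoring case."""
    words = re.findall(r"[a-z']+", text.lower())
    return Counter(w for w in words if w not in STOP_WORDS)

def relative_sizes(weights, base_size=12):
    """Assume linear scaling: a word seen n times is drawn at n * base_size."""
    return {word: count * base_size for word, count in weights.items()}

sample = "Medieval trade shaped the medieval city; medieval walls, medieval guilds."
weights = word_weights(sample)
sizes = relative_sizes(weights)
print(weights["medieval"], weights["city"])  # frequencies of the two words
print(sizes["medieval"] / sizes["city"])     # size ratio between them
```

In this sample, "medieval" appears four times and "city" once, so the ratio of their sizes mirrors the example in the paragraph above.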

We decided to proceed with the pilot using Twitter with Wordle.com for aggregation. To help focus student responses and reduce cognitive load,5 we provided learners with three prompts to answer in a free-text format throughout the movie. We designed the prompts to be relatively open-ended to allow learners enough latitude to explore, but focused enough to encourage thoughtful analysis on specific areas important to the instructors, as follows:

- Tweet thoughts on the source materials used to make claims in this film.

- Tweet thoughts on the main historical themes or arguments being made in the film.

- Tweet general thoughts or reactions to the film.

To implement our strategy, we surveyed the students to determine if they had portable devices to tweet with. Astonishingly, 69 out of 77 students had such a device that they were willing to use for this purpose. Our existing academic technology infrastructure had several computing devices that we could loan out to the class on movie days, making it trivial to provide devices to those who did not have one. Students rarely borrowed one of our devices on movie days, however; if they didn't have a device of their own, or if they forgot theirs, they simply negotiated with their neighbors to borrow a device when they wanted to tweet.

Once we determined that we had the needed device infrastructure, we set about testing the wireless infrastructure, which we deemed sufficient for our needs. Unwilling to make students create their own Twitter accounts (we tend to avoid forcing students to click an "I Agree" button in cloud-based tools if at all possible), we set up 10 course-based Twitter accounts for use in the class exercises. We had the 10 accounts "follow" one another and then made the usernames and passwords available on our Moodle site, so about 7–10 students could sign into each Twitter account.6 To receive participation credit for their tweets, the students had to begin their short responses with their university ID. Because we had created a small community of learning by having all of the course accounts follow one another, the students could see an aggregated feed of all of their peers' thoughts, regardless of which account they signed in to. Finally, we set the class Twitter accounts so that only approved people could follow us, and we prevented people from finding us through Twitter search, both to avoid unwanted Twitter crowdsourcing of our course discussion and to encourage free discussion among learners.
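Because several students shared each account, credit depended entirely on the ID prefix. The sketch below shows one way such tweets could be attributed. It is an illustration only: the function name, the ID format, and the rule that the ID is the first whitespace-delimited token are assumptions, not a description of how the pilot's tweets were actually processed.

```python
def credit_by_student(tweets, roster):
    """Map each rostered ID to the tweets that begin with it; collect the rest as unsourced."""
    credited = {student_id: [] for student_id in roster}
    unsourced = []
    for tweet in tweets:
        # Assumption: the university ID is the first token, optionally followed by ':' or ','.
        first, _, rest = tweet.strip().partition(" ")
        student_id = first.rstrip(":,").lower()
        if student_id in credited:
            credited[student_id].append(rest.strip())
        else:
            unsourced.append(tweet)
    return credited, unsourced

# Hypothetical roster and feed.
roster = {"abc123", "xyz789"}
feed = [
    "abc123 The film relies on charters",
    "XYZ789: what about the plague?",
    "go get tacos after class",
]
credited, unsourced = credit_by_student(feed, roster)
print(credited)
print(unsourced)
```

Tweets that do not start with a rostered ID fall into the unsourced list, which corresponds to the tweets excluded from the analysis later in this article.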

Not wanting to make any assumptions about Net Gen7 students' familiarity with Twitter and realizing that we have a diverse student population, many of whom are not Net Geners, we created some online training for the class on how to access and post to the Twitter accounts. We also conducted a brief trial run the week before our first movie to work out any bugs in the system. The trial went shockingly smoothly — students were able to log on easily and post their test tweets.

Pilot Evaluation

In compliance with the obligations of the grant, we conducted a fairly extensive evaluation of the pilot, including in-class observations, analysis of the Twitter feed itself, and a student survey. We'll discuss these results in some detail.

A first positive indicator that our approach might be bearing fruit was a simple attendance metric. Of the enrollment of 77 students, on the four days that we conducted Twitter projects we had attendance of 61 (79 percent), 59 (77 percent), 61 (79 percent), and 61 (79 percent). While we unfortunately do not have benchmark numbers from previous semesters, experience tells us that these are strong numbers, particularly for a day when the instructor is not intending to lecture or give a quiz; "movie days" have, in our experience, notoriously low attendance. The attendance numbers are especially strong given the fact that we were in Minnesota in mid-winter, mid-way through the semester, when winter malaise and semester ennui generally take a toll on classroom attendance.

The in-class observers (undergraduate students not enrolled in the class, hired and trained for this purpose as part of the grant) at the back of the room saw students using their laptops and mobile devices stay on Twitter and comment on the movie. They noted a surprising lack of attention drift, where students wander off to check e-mail or Facebook, text, or surf the web. Despite the obvious limitations to this type of observation, we believe the improved focus to be another generally positive indicator, although we don't rule out the possibility of some element of a Hawthorne Effect.8

Technology Fail

An unexpected technology fail on a movie day led to an inability to tweet during the second of two films. We encouraged students to "tweet analog" instead, taking notes on their thoughts that they could post when the issue with Twitter had been fixed. We observed that during the first film, students seemed engaged and tweeted their thoughts. During the second film, however, many students reverted: a few seemed authentically engaged in watching the movie, but most began chatting with neighbors, surfing the web, or sleeping. Some simply left class. Because of the confusion that the technology fail caused, no students were penalized for nonparticipation in this second analog tweeting session.

Our analysis of the Twitter feed itself confirmed our in-class observations: students seemed engaged in an ongoing, persistent way with the films. Table 1 outlines data gathered from tweets for four of the five in-class movies. (The second movie experienced technical fail and was removed from the analysis, as students were unable to tweet.) The metrics are derived using N values of the students who attended class for each movie showing, rather than from the overall course enrollment of 77 students.

Table 1. Twitter Feed Indicators of Persistence

| Movie | Relevant Tweets | Participating Students | Mean Tweets per Student | Standard Deviation | Number of Students Tweeting Once | Max Tweets from a Single Student |
| --- | --- | --- | --- | --- | --- | --- |
| Movie 1: The Middle Ages: The Medieval Plough; run-time 00:14:09 | 163 | 61 | 2.7 | 1.4 | 12 | 7 |
| Movie 2: Tools in Medieval Life; run-time 00:29:41 | No data; technology fail | No data; technology fail | No data; technology fail | No data; technology fail | No data; technology fail | No data; technology fail |
| Movie 3: The Making of a City: Medieval London, 1066–1500; run-time 00:21:01 | 157 | 59 | 2.7 | 1.7 | 17 | 9 |
| Movie 4: Siena: Chronicles of a Medieval Commune; run-time 00:29:02 | 207 | 61 | 3.4 | 2.5 | 15 | 11 |
| Movie 5: Cathedral; run-time 00:57:37 | 172 | 61 | 3.2 | 2.5 | 11 | 9 |

Individual learners could have chosen to simply tweet their single required response and then disengage for the remainder of the film. Instead, many chose to engage multiple times during each movie.
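For readers who want to reproduce indicators like those in Table 1 from their own feeds, the following sketch computes them from a per-student tweet count. The sample counts are invented, and the use of the sample (rather than population) standard deviation is an assumption, since the table does not specify which was used.

```python
from statistics import mean, stdev

def persistence_indicators(tweets_per_student):
    """Summarize a {student_id: tweet_count} mapping using the Table 1 columns."""
    counts = list(tweets_per_student.values())
    return {
        "relevant_tweets": sum(counts),
        "participating_students": len(counts),
        "mean": round(mean(counts), 1),
        "std_dev": round(stdev(counts), 1),  # assumption: sample standard deviation
        "tweeting_once": sum(1 for c in counts if c == 1),
        "max_single_student": max(counts),
    }

# Hypothetical counts for four students.
indicators = persistence_indicators({"s1": 1, "s2": 2, "s3": 3, "s4": 6})
print(indicators)

# The Movie 1 mean follows directly from the table: 163 tweets over 61 students.
movie1_mean = round(163 / 61, 1)
print(movie1_mean)
```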

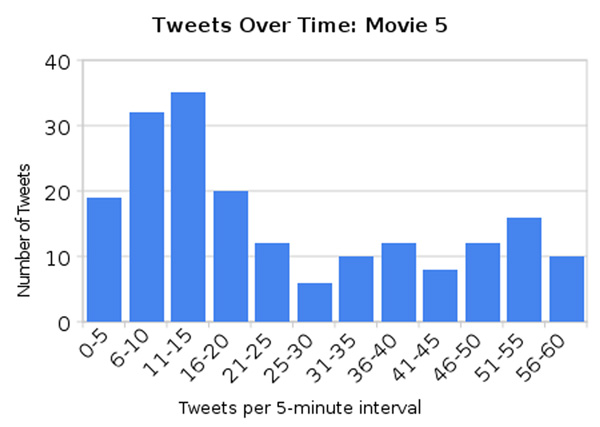

Additionally, a basic analysis of the number of tweets over time shows that, while the most Twitter activity occurred in the first 15–20 minutes of each film, the class in aggregate continued an ongoing discourse beyond this initial time period. Figure 1 shows the temporal distribution of tweets for our final movie. This film was the longest by far, with a run-time of 57 minutes and 37 seconds; intuitively, one would expect persistence to suffer the most during this film. While the clear focus of tweeting activity occurred in the first 20 minutes of the film, a steady stream of comments and thoughts emerged throughout.

Figure 1. Tweets over Five-Minute Intervals for Movie 5: Cathedral

A less rigorous quick scan of the remaining Twitter feeds from the other three films indicated similar trends: a flurry of activity at the beginning of the movie, followed by a steady stream of tweets throughout.
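A temporal distribution like the one in Figure 1 can be produced by bucketing each tweet's offset from the start of the film into five-minute intervals. The sketch below assumes the offsets are already available in seconds; the function name and sample offsets are hypothetical.

```python
from collections import Counter

def tweets_per_interval(offsets_seconds, interval_minutes=5):
    """Return (start_minute, end_minute, tweet_count) for each interval, including empty ones."""
    width = interval_minutes * 60
    bins = Counter(int(offset // width) for offset in offsets_seconds)
    last = max(bins) if bins else -1
    return [
        (i * interval_minutes, (i + 1) * interval_minutes, bins.get(i, 0))
        for i in range(last + 1)
    ]

# Hypothetical offsets; 3457 seconds is 57:37, the run-time of the final film.
histogram = tweets_per_interval([30, 120, 299, 300, 900, 3457])
for start, end, count in histogram:
    print(f"{start:02d}-{end:02d} min: {count}")
```

Keeping the empty intervals in the output makes gaps in the discourse visible rather than silently dropping them.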

A number of tweets went uncoded in our analysis. Of the several hundred tweets from across the four successful Twitter activities, only 32 tweets total were unsourced to a student's ID (4, 8, 7, and 13 successively in movies 1, 3, 4, and 5); these were excluded from the analysis.

We also excluded from analysis a series of tweets unrelated to the content of the films, which fell into three broad categories: administrative, personal, and adversarial.

- Administrative tweets, totaling 5 overall (1, 3, 1, and 0 for the four successive, coded Twitter activities) focused on challenges with the process or requests of the instructional staff. For example, students might ask the TA to turn the volume on the movie up, comment that they had trouble remembering the Twitter username and password, or ask if anyone else found the Twitter exercise distracting from the movie.

- Despite the instructional staff's requirement that the exercise not be used in this way, a number of tweets of a personal or playful nature were posted. These 15 tweets (2, 6, 6, and 1 for each of the four coded exercises, successively) came in a variety of forms, but included requests to "go get tacos after class," playful comments to the instructional staff ("Hey, Jude!"), and, in one case, an obvious attempt to get the word "beef," which was repeated over and over in the tweet, to show up in the word cloud that would be shown to the class after the movie.

- Finally, and perhaps of more serious concern, there were a small number of tweets that we deemed to be adversarial — directed at other members of the learning community, unsourced, and framed negatively. For example, one tweet suggested that a particularly vocal member of the class "shut up." This type of unfortunate, unsourced comment will need to be addressed in future versions of the pedagogy.

We saw persistent activity among the students on Twitter, but we also wanted to determine if that activity was thoughtful. This distinction has been articulated as two dimensions of motivation: persistence and mental effort.9 Mental effort is particularly challenging to measure, as it is a multifaceted construct affected by a number of intrinsic and extrinsic factors, including prior experience, cognitive load, and physiological responses to psychological stimuli10 such as stressors related to feelings of efficacy,11 attributions for success,12 and a host of other factors.13 Not having the resources to conduct more rigorous evaluations of effort in experimental design, we decided to use proxy indicators based loosely on Salomon's amount of invested mental effort (AIME) model.14 To this end, we conducted a content-based evaluation of the students' tweets, filtering out what we considered "automated responses" and focusing instead on student demonstrations of mastery that articulated at least minimally cogent, lucid thoughts or questions. In addition, we conducted a student survey, asking students explicitly about their degree of mental effort, another approach which has methodological precedent.15

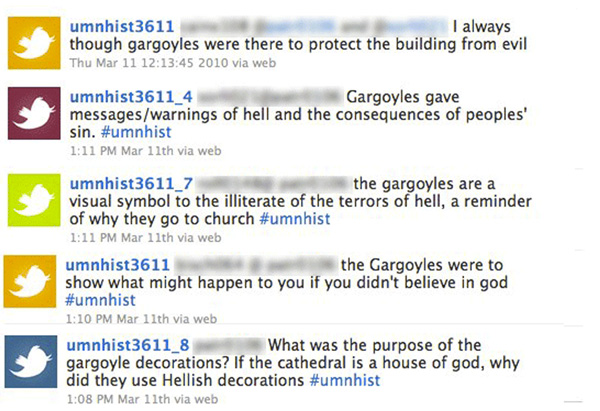

We found that students' thoughts and reflections in Twitter were generally astute. Students engaged in productive intellectual discourse, making reflections or asking questions germane to the instructor-provided prompts. On occasion, we even found that students engaged in peer-to-peer discourse, offering ideas or trying to answer one another's questions. Figure 2 shows a sample of a conversation that occurred during the final film on European cathedrals about the role of gargoyles in cathedral architecture. Note that this conversation has been edited from the original Twitter feed to juxtapose the tweets relevant to this conversation. In the usual style for blogging and micro-blogging, the conversation should be read from bottom to top to see the original question and the chain of responses. Identifying data has been blurred.

Figure 2. Twitter Conversation about Gargoyles (Bottom to Top)

We conducted a detailed analysis of the Twitter feed for the first film, The Middle Ages: The Heavy Plough. For this analysis, we included all 174 tweets, including those that were unsourced or unrelated to the course content. We coded each on a simple three-point scale, determined by the TA for the course: 1 for good, thoughtful insight; 2 for relevant but not particularly insightful; and 3 for unrelated or completely trivial. We coded 64 percent (112 of 174) in category 1 and 87 percent (152 out of 174) as combined category 1 and 2. Again, we found these results heartening.

We conducted a more general read of the remaining three feeds and found similar results. Most student responses throughout the films were germane and generally thoughtful. We did not find an abundance of what we considered trivial observations or automated responses, although some certainly were less interesting than others. A few quotations from our feeds follow:

"...as a farmgirl, I find it interesting how knowledgeable they were, and also how some of the main ideas still carry through today"

"only mentioned gutenburg for printing press even though China had it centuries before (and they mentioned China for other things)"

"What role did the king/royalty have in the construction of cathedrals, especially ones who had divine right to govern?"

"the plague had nearly the same effect on Siena and London because the plague could not be purged no matter what government"

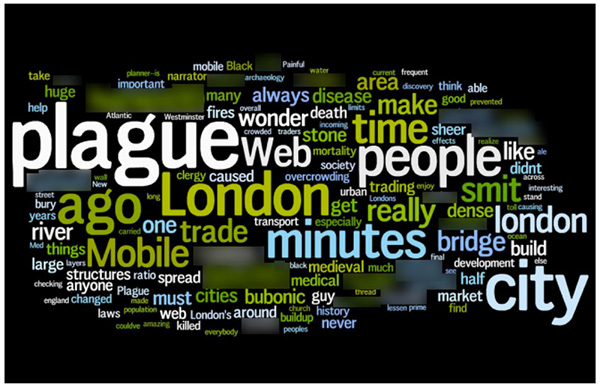

As we mentioned earlier, part of our model included using an aggregated tag cloud to identify themes. The idea was to use these tag clouds in a way similar to how clickers can be used to aggregate responses to multiple-choice questions.

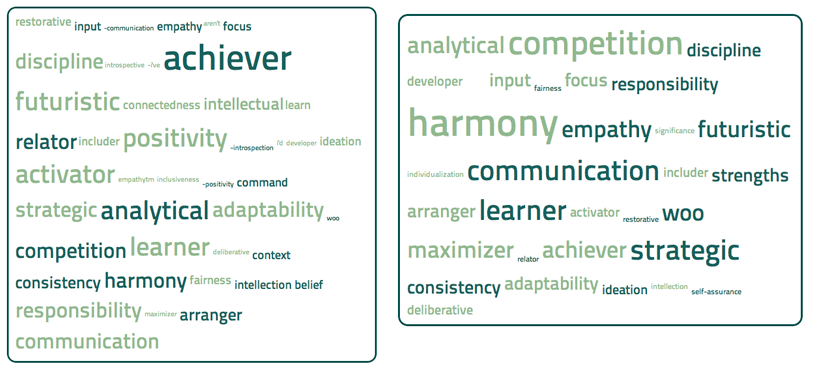

Figure 3 represents the word cloud for the third movie, The Making of a City: Medieval London, 1066–1500, with identifying data blurred. Notice that the most tweeted word was "plague." The instructors considered issues concerning the plague important, but not really central, to the theme of the movie. This provided a moment of reflection for the class, where the instructors articulated clearly to the class the role that the plague played — and didn't play — in the formation of medieval London. It also guided the post-viewing discussion.

Figure 3. Word Cloud for the "London" Movie

Beyond leveraging the word clouds to guide discussion, we gave learners a simple attendance/participation grade on a three-level scale: check plus (tweets considered thoughtful by the instructors), check (tweets not considered thoughtful by the instructors), and check minus (did not tweet).

At the end of the semester, we conducted a student survey that asked specific questions about the use of Twitter in the course. Twenty-nine out of the 75 students enrolled in the course, or approximately 40 percent of the class, responded to each question in our survey:

- Only 13 of the 29 respondents (45 percent) agreed or strongly agreed that they paid closer attention because of the Twitter exercises; this means, of course, that the other 55 percent indicated that they disagreed or strongly disagreed with that statement.

- Only six students (21 percent) agreed or strongly agreed that they worked harder in the course because of the Twitter exercise.

- Twelve of the 29 respondents (41 percent) indicated that the Twitter exercise helped them learn, leaving nearly 60 percent that disagreed or strongly disagreed with this statement.

- Eleven respondents (38 percent) suggested that the Twitter exercise enhanced interaction with the instructor, TAs, or other instructional staff.

- Eleven students (38 percent) agreed or strongly agreed that they thought more about the course material because of the Twitter exercise than they would have if it had not been used.

Student comments included:

"I like the idea of the Twitter exercise overall, but I feel like it distracted from the movie."

"Twitter was distracting and disorganized."

"I thought the Twitter exercise made me focus more on Twitter than on what I needed to learn."

"Twitter was fun because of the jumping point for discussion it provided after the film."

Certainly, the most charitable interpretation of these data would be that the results are mixed in terms of student perception of efficacy. A clear and consistent, if slight, majority of the students did not seem to see the learning or motivational benefits of the Twitter exercise that the instructional and evaluation teams observed. The student perceptions of efficacy certainly did not reflect our own sense that the project met with our learning goals and seemed to transform the culture of the course during the in-class films to engage students in thinking constructively about the movies shown. We'll discuss possible interpretations of these results in the Discussion section.

ChimeIn: An Authenticated, Scalable Solution

While the pilot of our pedagogical strategy yielded, to our minds, generally positive results, it was a deeply manual process that required far too much human resource support to scale. We also saw an opportunity to use this general approach to address other issues that more traditional clicker technologies had presented over time. We've heard many instructors complain over the years that loading an additional $30 or more onto already financially burdened students is a bridge too far, particularly when most students already have some form of computing device that they bring with them to class. Why can't students use their own devices, such as laptops, smartphones, or cell phones, to click in their responses? Clicker companies have begun offering cloud-based clicker tools that require students to purchase a license to participate, but of course this doesn't address the issue of loading additional costs onto students. We also believed that the commercial offerings have remained relatively uninspiring, not fully realizing what we see as the potential of student response technology, including allowing more sophisticated forms of input and more interesting visualizations of students' collective work.

In discussions with several key faculty members about the pilot project, many expressed concerns about the "open" nature of Twitter and moving students' work to the cloud. One instructor, for instance, who wanted to use the tool (and has since begun using it) in a course in the Gender, Women, and Sexuality Studies department indicated that learners in her class might be asked to respond to questions about rape, gender, and sexuality, and that she would feel much better about asking them in a closed, authenticated, and still anonymous environment.

Not finding anything that specifically met these needs in the commercial or open-source market, we set our sights on developing a cloud-based tool that would power the same pedagogical strategies as our Twitter/Wordle pilot, but in a more tailored, user-friendly, scalable experience. ChimeIn was the result.

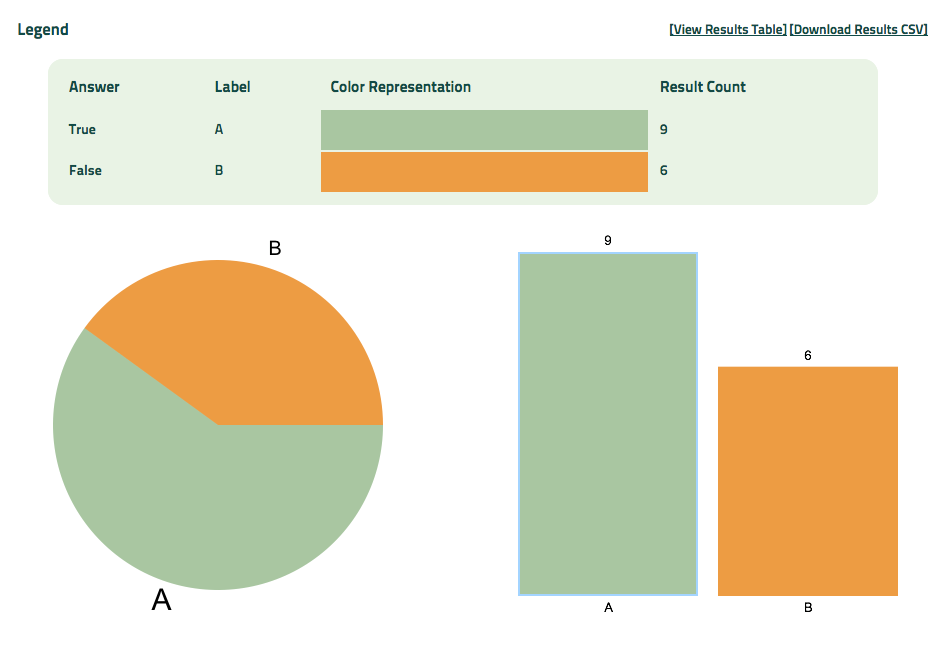

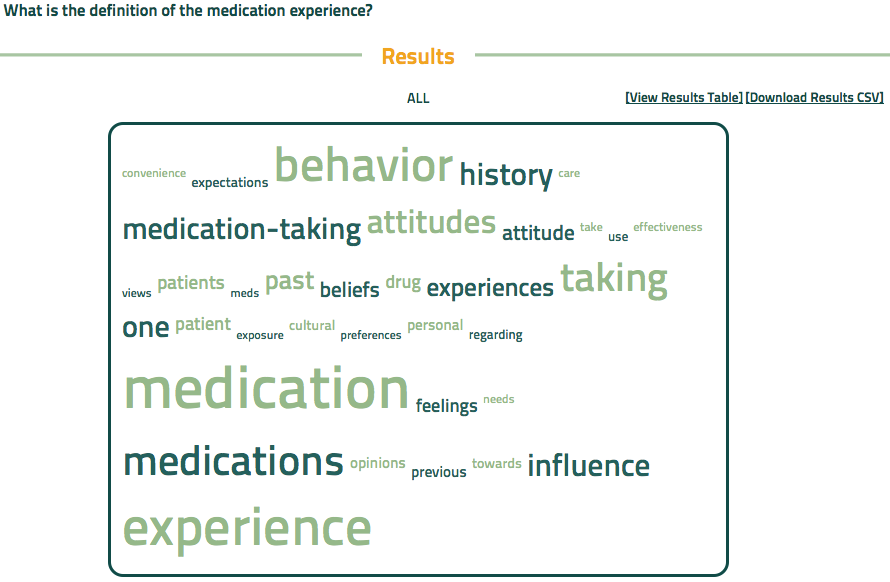

ChimeIn is a cloud-based student response system that (currently) allows instructors to ask students three types of question: true/false, multiple choice, and free text. Students "chime in" with their responses to the questions in a number of ways: via a web-based interface, via a smartphone interface, or by SMS/texting their responses. (As devices change, tweaks to the input interface and protocols should be trivial.) The system is relatively device-neutral; our goal was to make chiming as ubiquitously accessible as tweeting or updating a Facebook status. Responses from true/false and multiple choice questions appear as a bar graph and as a pie chart (see Figure 4). Responses to text-based questions appear as a word cloud (see Figure 5, which shows student answers to the question "What is the definition of the medication experience?" in a course in the College of Pharmacy). And, much like a Twitter feed, the chronological, dynamically updating archive of text-based chimes can be monitored (see Figure 6, which shows the chronological archive of chimes for Reyerson's history course on the Crusades with identifying data blurred). Questions may be anonymous or tied to a particular student for grading purposes. Instructors can also download the source data collected by ChimeIn in a CSV format, allowing them to perform other types of analysis or integrate responses with a learning management system (LMS) gradebook.
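As an illustration of the kind of follow-up analysis a CSV download makes possible, the sketch below counts responses per student, for example as input to a participation grade. The column names (`student_id`, `question`, `response`) and the sample rows are hypothetical; the actual layout of the ChimeIn export is not described in this article.

```python
import csv
import io
from collections import Counter

# Hypothetical export; real ChimeIn column names may differ.
SAMPLE_EXPORT = """student_id,question,response
abc123,Q1,True
xyz789,Q1,False
abc123,Q2,plague and trade
"""

def responses_per_student(csv_text):
    """Count how many responses each student submitted."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return Counter(row["student_id"] for row in reader)

counts = responses_per_student(SAMPLE_EXPORT)
for student_id, n in sorted(counts.items()):
    print(student_id, n)
```

With a real export, the same function could read the downloaded file's text in place of `SAMPLE_EXPORT`, once the column name is adjusted to match.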

Figure 4. Response to a True/False Question (N = 15)

Figure 5. Word Cloud Generated from Student Responses

Included with permission of Drs. Rod Carter, Keri Naglosky, and Don Uden, course instructors.

Figure 6. Chronological Archive of Chimes

ChimeIn took one full-time programmer less than two weeks to build, with the help of one undergraduate programmer and one undergraduate interface designer. It is housed on an existing server and requires very little maintenance. We estimate that the initial development costs were under $5,000. It is fully integrated with our PeopleSoft data and has an interface that allows instructors to create courses by the university course ID number that will auto-populate the students signed up in their course rosters. Students and additional instructors can also be added manually. Public ChimeIn "courses" can also be created to allow instructors or administrative units at the college to use the tool in noncurricular ways. Technically, the application was developed using a combination of PHP, jQuery, MySQL, jQTouch, and Google Voice.

We added several additional features to enhance the instructional experience:

- All question types can be set to update dynamically as students are chiming. This allows the bar graph and pie chart or word cloud to update as new responses come in, changing relative size and shape dynamically to show the movement of learners' collective chiming.

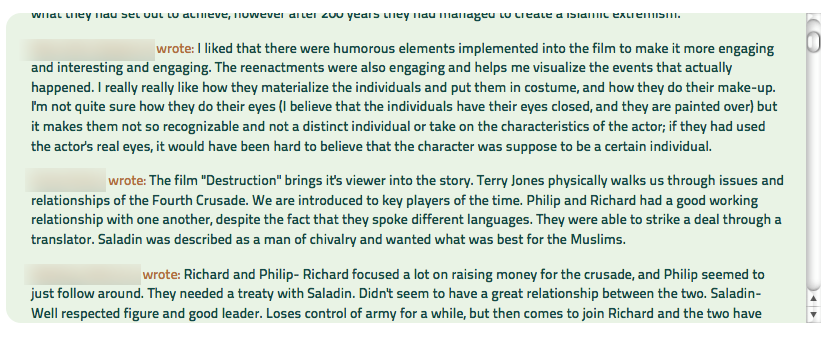

- In addition to dynamically generating the word cloud for text-based responses, individual words can be selected from the cloud, and the text-feed will auto-sort to include only those chimes with the selected word, which is highlighted. This feature facilitates identifying themes from chimes in real time.

- Finally, we added a "hot swap" feature that allows learners to click a single button within the ChimeIn interface that logs them out and returns to the login screen, so devices can be easily shared among learners even in authenticated mode with a minimum of disruption.

For an example of the first two features, see the demo at http://z.umn.edu/dynamicchimes. Figure 7 shows a word cloud generated from student responses to a film in Reyerson's course on the Crusades. The word "blame" is selected in the word cloud (top) and sorted/highlighted in the free-text response feed (bottom), with identifying data blurred.

Figure 7. "Blame" Highlighted in Word Cloud and Free-Text Response Feed
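The word-selection behavior shown in Figure 7 can be approximated in a few lines. This sketch is in Python for illustration only (ChimeIn itself was built with PHP and jQuery), and the whole-word, case-insensitive matching rule and the `**` highlight markers are assumptions rather than a description of the production code.

```python
import re

def filter_and_highlight(chimes, selected_word):
    """Keep only chimes containing the selected word and wrap each match in ** markers."""
    pattern = re.compile(rf"\b{re.escape(selected_word)}\b", re.IGNORECASE)
    matches = []
    for chime in chimes:
        if pattern.search(chime):
            matches.append(pattern.sub(lambda m: f"**{m.group(0)}**", chime))
    return matches

# Hypothetical chimes.
chimes = [
    "Who is to blame for the sack?",
    "The film blames no one",
    "Blame falls on the leaders",
]
selected = filter_and_highlight(chimes, "blame")
for line in selected:
    print(line)
```

Under the whole-word assumption, "blames" is not treated as a match for "blame"; a production tool might instead choose to match word stems.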

Note that ChimeIn was intended not only to power the specific pedagogical strategy from our pilot but also to provide a range of other features that would allow additional pedagogies to emerge. Some of those might simply be allowing students to use their personal mobile devices to answer multiple-choice clicker-style questions. Some might be free-text clicker-type questions. Others might be more dialogic, allowing a backchannel with a cloud-based representation of the most commonly chimed thoughts, similar to our initial pilot.

Initial response to ChimeIn has been overwhelming. As of this writing, just over one semester after launch:

- The system has 168 unique instructors who have created courses.

- Over 300 courses have been created in ChimeIn.

- About 100 courses have five or more questions in them — which we believe implies that they are not simply "test" courses, but actually have people using them for repeated polling.

- Over 1,400 questions have been created, and more than 12,000 individual responses have been chimed.

- More than 700 students have "bound" their cell phone numbers to ChimeIn to allow them to SMS their responses.

An evaluation of the size and scale of our Twitter and Wordle pilot is not funded for ongoing uses of ChimeIn, nor do we believe it is feasible given the tool's popularity. However, some short examples demonstrate how it is being used and its versatility.

Use Case 1: Extending the Pilot

Reyerson uses ChimeIn much as she used Twitter and Wordle in her pilot — to allow learners to archive their thoughts and engage one another during in-class films, and then to guide discussion after the film. She has used the ability to highlight individual words in the word cloud to help focus the discussion. The authentication allows her to easily identify individual student responses and to ask students to follow up on their chimed thoughts. Given ChimeIn's ease of use, she can manage the entire process on her own, without additional instructional design or technical help. Students in the class use the hot swap feature to share devices to chime their thoughts during the films.

Use Case 2: Leadership in the University

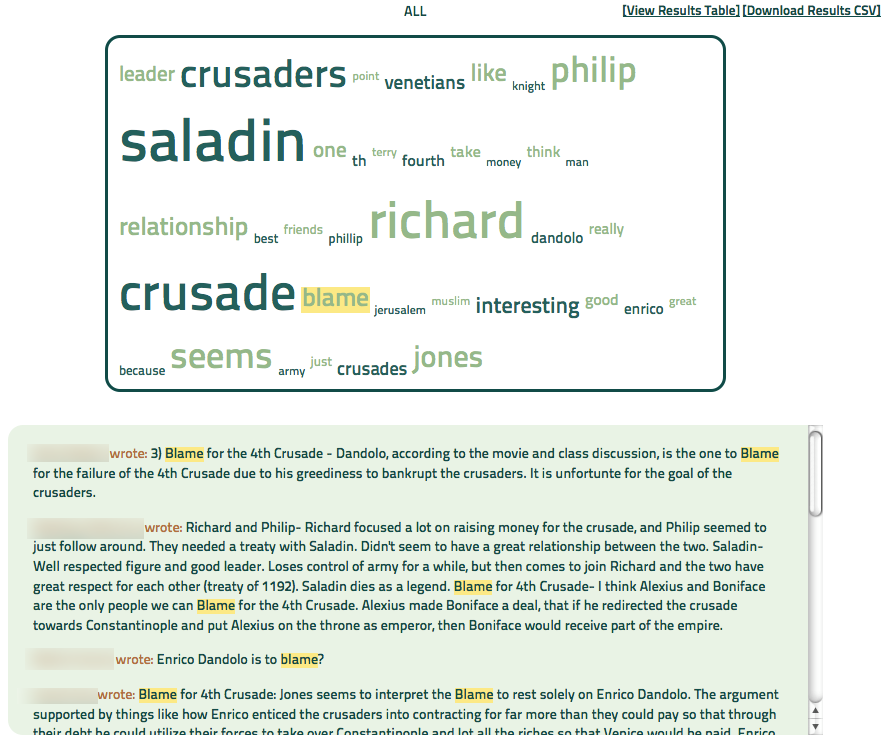

Jude Higdon and Colin McFadden teach the online course Leadership in the University, in which learners read the book Strengths-Based Leadership16 and examine the 34 themes from the StrengthsFinder assessment. The instructors asked them to predict their five strongest themes, which students chimed. The students then took the StrengthsFinder assessment and chimed the five themes identified by that tool to a new ChimeIn post. Small groups discussed the similarities and differences between their predicted themes and those identified by the StrengthsFinder tool, using the opportunity to explore the value of these types of analytical tools for traits-based leadership models. The students and instructors also found the aggregate "strengths clouds" of the class interesting (see Figure 8). The word clouds allowed them to see how the class's strengths, predicted by the students and then identified by the StrengthsFinder tool, looked for the class as a whole. In the future, we may offer a student interface for the word clouds to let students sort and find others in the class with their signature themes, or perhaps to build a "dream team" of others in the class having complementary strengths.

Figure 8. Comparison of Strengths Word Clouds, Predicted (left) and Identified by StrengthsFinder (right)

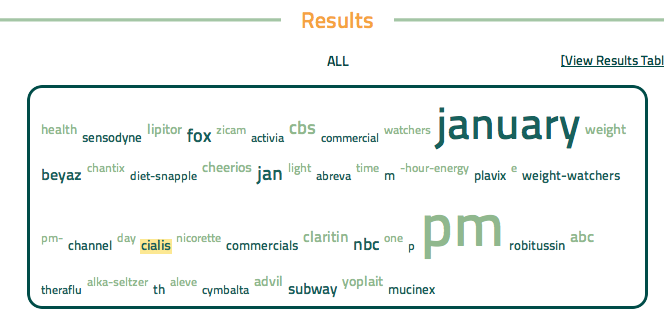

Use Case 3: Prime-Time TV Drug Commercials

In the College of Pharmacy course Pharmaceutical Care 2, Rod Carter, Keri Naglosky, and Don Uden asked students to watch several hours of prime-time television over the course of a week and post the subject of the commercials they saw to a ChimeIn post. (The questions can be left open indefinitely to collect ongoing data.) The aggregated cloud was used during class to discuss the prevalence and commercialization of drugs in mass media marketing (see Figure 9). Students and instructors used the ChimeIn sorting feature during class to look for trends and connections between and among drugs, programming, networks, and target demographics in order to better understand the marketing of commercial prescription and non-prescription drugs.

Figure 9. Word Cloud of Prime-Time Commercials

Use Case 4: Student Union Polling

Nonacademic units have also shown a strong interest in the polling technology. The Coffman Student Union has built an interface that leverages the ChimeIn API to run quick polls on their digital signage. They might pose questions such as "What band would you most like to see in Coffman this spring?" or "What would be the best use of the renovated space of the east side of the building, near the large-screen televisions?" These questions are entirely open and not tied to a specific course, so students can simply text to a phone number on the screen or navigate to a special URL to respond to the poll on their smartphone or laptop/netbook.

Next Steps for ChimeIn

The open nature of the development environment makes ChimeIn a nimble, easily updated application that can quickly move in new directions, both technologically and pedagogically. As an example, it became clear relatively early in both the pilot and the ChimeIn rollout that one challenge with free-text response is that distractor words can crowd the word cloud, obscuring the important content relationships. (See, for example, the words "January" and "pm" in Figure 9.) We have added the ability to simply right-click and exclude any word from the word cloud, allowing the cloud to redraw itself with the remaining, content-based, words.
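The exclusion behavior described above can be sketched in a few lines. This is a minimal illustration, not ChimeIn's actual code: the function name `cloud_counts`, the tokenizing rule, and the sample responses are all assumptions made for the example.

```python
import re
from collections import Counter


def cloud_counts(responses, excluded=()):
    """Count word frequencies across free-text responses.

    Words listed in `excluded` (hypothetical parameter) are skipped, so a
    cloud sized by these counts would redraw without them.
    """
    skip = {word.lower() for word in excluded}
    counts = Counter()
    for text in responses:
        # Lowercase, then keep runs of letters, digits, and apostrophes.
        for word in re.findall(r"[a-z0-9']+", text.lower()):
            if word not in skip:
                counts[word] += 1
    return counts


# Invented sample responses, loosely modeled on the commercial-logging activity.
responses = ["Aspirin January 9 pm", "Ibuprofen 8 pm", "aspirin january"]
counts = cloud_counts(responses, excluded={"january", "pm"})
```

Recomputing the counts whenever the exclusion set changes keeps the logic simple; a cloud renderer would then size each remaining word by its count.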

We believe some classic clicker uses could easily be expanded as well. For example, enabling checkbox input, which allows users to select more than one option, rather than just multiple choice, which is necessarily single-answer, could be quite interesting. Additionally, we believe that allowing learners to rank-order a list might hold pedagogical promise. We are also exploring the option of allowing learners to add a related efficacy indicator to their responses, indicating how sure they are of the answer they just chimed. All of these options pose opportunities and challenges for visualizing learner responses.
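One way to think about the visualization challenge is to ask what numbers each input type would produce. The sketch below is illustrative only and assumes hypothetical data shapes. Rank-order responses are scored with a Borda count, a standard technique we have swapped in for the example; it is not a description of how ChimeIn does or will work.

```python
from collections import Counter, defaultdict


def tally_checkbox(responses):
    """Each response is a collection of selected options; count each choice once per learner."""
    counts = Counter()
    for selected in responses:
        counts.update(set(selected))
    return counts


def borda_scores(rankings):
    """Each ranking lists options from most to least preferred.

    An option at position i of an n-item ranking earns n - 1 - i points.
    """
    scores = Counter()
    for ranking in rankings:
        n = len(ranking)
        for i, option in enumerate(ranking):
            scores[option] += n - 1 - i
    return scores


def mean_confidence(responses):
    """Each response is (answer, confidence between 0 and 1); average confidence per answer."""
    by_answer = defaultdict(list)
    for answer, confidence in responses:
        by_answer[answer].append(confidence)
    return {answer: sum(vals) / len(vals) for answer, vals in by_answer.items()}


checkbox = tally_checkbox([{"A", "B"}, {"B"}])
ranked = borda_scores([["A", "B", "C"], ["B", "A", "C"]])
confidence = mean_confidence([("A", 1.0), ("A", 0.5), ("B", 0.25)])
```

Each function returns a simple mapping from option to number, which could feed a bar chart or a sized word cloud in the same way single-answer tallies do.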

To continue maturing the word cloud, we have engaged a graduate-student programmer to develop additional semantic text analysis for the word clouds, making them "smarter." So far, the programming has allowed for clustering of basic word variations; for example, iPad and iPads would appear as the same word in the cloud, as would farm, farmer, farming, and farmed. Emerging options include visual, color-coded cues that indicate first- and second-order related words to a selected word.
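To illustrate the idea of clustering word variations, the sketch below strips a few common English suffixes before counting. It is a deliberately naive stand-in for illustration: the suffix list and the names `base_form` and `clustered_counts` are assumptions, not the analysis actually built for ChimeIn.

```python
from collections import Counter

# Longer suffixes are checked first so "farmers" loses "ers", not just "s".
SUFFIXES = ("ing", "ers", "er", "ed", "s")


def base_form(word):
    """Return a crude base form by removing one known suffix, if any.

    At least three characters must remain, so short words are left alone.
    """
    w = word.lower()
    for suffix in SUFFIXES:
        if w.endswith(suffix) and len(w) - len(suffix) >= 3:
            return w[: -len(suffix)]
    return w


def clustered_counts(words):
    """Count words after mapping each one to its crude base form."""
    counts = Counter()
    for word in words:
        counts[base_form(word)] += 1
    return counts


words = ["iPad", "iPads", "farm", "farmer", "farming", "farmed"]
counts = clustered_counts(words)
```

A rule this simple will mis-cluster some words (for example, "glass" becomes "glas"), so a real implementation would more likely rely on an established stemming or lemmatization approach.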

Still-nascent areas for visualizing and utilizing student-generated content might offer additional opportunities for development. Following Higdon and McFadden's presentation17 at a recent conference, we brainstormed with a range of individuals from other institutions of higher learning. One idea that emerged was enabling word clouds to form related concept maps, allowing learners to draw connections between and among items that they chime, as well as adding relative strength-of-connection indicators to those connections. Another discussion led to the idea of clustering individual words in the cloud into categories and subcategories to see the relative strength of those categories in the cloud. A range of other types of inputs and visualizations could emerge in this space.

We hope to release ChimeIn under an open-source license within the year. In the short term, we are happy to discuss sharing code with anyone interested. Interested individuals can contact Higdon, [email protected], or McFadden, [email protected].

Discussion

Overall, we consider the Twitter/word cloud pilot a success. From the instructor perspective, we saw greater student engagement/motivation during the movie days than we had in previous semesters without this type of pedagogy. We believe the data suggest that students were motivated to engage thoughtfully and effortfully in a protracted way with the movie content, which was our primary goal.

The results from the student survey were disappointing and incongruous with the other evaluation metrics from this project. Several possible explanations for this disconnect exist.

- Explanation 1: Students enjoy easy tasks and don't enjoy more difficult ones.

One explanation for these findings is that students simply don't enjoy working hard during class. Richard Clark suggested that students may make learning choices based on perceived efficiencies, choosing lower-load learning strategies that they will enjoy over more productive, but potentially more effortful, learning strategies that would result in greater learning outcomes.18 This explanation, of course, assumes that learners in our survey conflated the idea of task enjoyment with the constructs intended to be measured: persistent mental effort, efficacy, learner-centered instruction, and overall learning outcomes.

- Explanation 2: The Twitter project did not help students learn and may even have been deleterious to their learning.

We can't have a reasoned discussion of possible explanations for these findings without acknowledging that the project simply might not have worked — that the pedagogy did not help students learn and might, in fact, have had a distracting, deleterious effect on their learning.

We are certainly sympathetic to student complaints that they found the Twitter activity distracting. And yet we observed more student engagement when the Twitter project was included. The difference between student and instructional staff perceptions of efficacy, it seems to us, results from different ways of framing what is compared. Students seem to be comparing a classroom situation in which learners are thoughtfully engaged in watching the movies with one in which they are distracted from watching the movies to tweet on the film. The instructional staff, again drawing on past experience as well as the evidence provided by the Twitter fail, would frame the comparison as being between a group of students disengaged from the films (either absent from class altogether, or engaged in other activities such as sleeping, texting, or surfing the web) and a group of students engaged in watching the films and trying to make sense of their thoughts and ideas about the movies, distracted every few minutes to share those thoughts by tweeting.

- Explanation 3: Students are unfamiliar (and uncomfortable) with this culture of learning.

Another possible explanation is that students are simply unfamiliar with, uncomfortable with, and/or unconvinced that they should have to engage in this type of "culture of learning." There is an ongoing debate about the commercialization of higher education and students as "customers."19 In the context of this debate, one could argue that students as customers consider education something "owed" to them, a commodity which they have bought and paid for. Students thus might resist efforts to engage them in active learning activities. When education becomes a commodity, then learning becomes transactional, and efforts to engage students in unfamiliar models of co-constructed sensemaking might be viewed with suspicion — or even as a breach of contract.

There might be elements of truth in each of these (or other) explanations for the distance between the students' and instructional staff's perceptions of efficacy of the project. No pedagogy is perfect. Asking students to engage in any activity, by definition, pulls them away from other activities, some of which might be as or more productive for their learning than the ones the instructor has crafted. Additionally, this pilot project entered untested pedagogical waters, which can create disorganization despite the best efforts of instructional staff. Further, the somewhat opportunistic use of technology platforms not designed for these specific types of activities no doubt did feel a bit clumsy and distracting to students. Many of these issues, we believe, can be addressed in the future by refining the pedagogical process and customizing the technology, which we have now begun to do.

The Evolution to ChimeIn

ChimeIn has also proven quite successful, although it is still very much in its infancy and subject to some limitations. While the dialogic, peer-learning pedagogy of the original project can still be achieved, limitations on the server side in the current implementation of ChimeIn mean that we have not yet been able to allow students to view the entire feed on their personal computing devices. The instructor can project the feed at the front of the room, but we believe this will seriously limit the peer-to-peer responses that we saw in the original project on Twitter. Reyerson, who is currently using ChimeIn much as she did with Twitter and Wordle in the original pilot, has anecdotally reported as much. We hope to address this issue in the future to create a more "Twitter-like" experience for learners, where they can see the feeds on their personal devices.

That said, the tool seems to have sparked the imaginations of a wide range of users from across the university. It is being used in courses from the social sciences and humanities to the hard sciences and professional health care education. Both the faculty and administration have begun to use ChimeIn for instructional and administrative purposes.

Conclusions

The pedagogical and technical strategy that we have begun to employ addresses several key issues that the classic clicker approach did not, most relevantly the cost of a single-use device and the lack of meaningful free-text input, aggregation, and analysis. We believe the approach, and the tool, show great promise and are eager to continue refining both. From the perspective of the instructional staff, the pilot demonstrated that active learning strategies must be engaged in thoughtfully, and instructors should be aware that students might resist instructional shifts that ask them to become more engaged. Similarly, technology should be invoked in a thoughtful way to minimize learner distraction; a focus on ensuring that tools and activities serve student learning must be paramount. Finally, we believe that pedagogical strategies for leveraging free-text responses in a live classroom environment are in their infancy and will need refinement and expansion to prove efficacious for the future.

Acknowledgments

The pilot project discussed in this article was funded by a Student Technology Fees grant from the College of Liberal Arts, University of Minnesota. Our thanks to Drs. Rod Carter, Keri Naglosky, and Don Uden, and to Kurtis Scaletta, for their collaboration.

- Joanna Dunlap and Patrick Lowenthal, "Horton Hears a Tweet," EDUCAUSE Quarterly, vol. 32, no. 4 (2009), and Joanna Dunlap and Patrick Lowenthal, "Tweeting the Night Away: Using Twitter to Enhance Social Presence," Journal of Information Systems Education, vol. 20, no. 2 (Summer 2009).

- Charles Hodges, "If You Twitter, Will They Come?" EDUCAUSE Quarterly, vol. 33, no. 2 (2010).

- Hans Aagard, Kyle Bowen, and Larisa Olesova, "Hotseat: Opening the Backchannel in Large Lectures," EDUCAUSE Quarterly, vol. 33, no. 3 (2010); and Jeff Young, "Professor Encourages Students to Pass Notes During Class — via Twitter," Chronicle of Higher Education, April 8, 2009.

- Tom Barrett, "Interesting Ways to Use Wordle in the Classroom," on Google Docs (2010).

- Jeroen J.G. van Merriënboer and John Sweller, "Cognitive Load Theory and Complex Learning: Recent Developments and Future Directions," Educational Psychology Review, vol. 17, no. 2 (2005), pp. 147–177.

- Although this is clearly a nontraditional approach to using Twitter, our team's read of the Twitter Terms of Service suggested that this use was in compliance with their expectations.

- Diana Oblinger and James Oblinger, "Is It Age or IT: First Steps Toward Understanding the Net Generation," in Educating the Net Generation, Diana G. Oblinger and James L. Oblinger, eds. (Boulder, CO: EDUCAUSE, 2005).

- Henry A. Landsberger, Hawthorne Revisited: Management and the Worker, Its Critics, and Developments in Human Relations in Industry (Ithaca, New York: Cornell University, 1958).

- Richard E. Clark and Fred Estes, Turning Research into Results: A Guide to Selecting the Right Performance Solutions (Atlanta: CEP Press, 2002).

- Katherine S. Cennamo, "Learning from Video: Factors Influencing Learners' Preconceptions and Invested Mental Effort," Educational Technology Research and Development, vol. 41, no. 3 (1993), pp. 33–45; G. Salomon, "Introducing AIME: The Assessment of Children's Mental Involvement with Television," in New Directions for Child Development: Viewing Children Through Television, K. Kelly and H. Gardner, eds. (San Francisco: Jossey-Bass, 1981), pp. 89–102; and G. Salomon, "Television is 'Easy' and Print is 'Tough': The Differential Investment of Mental Effort in Learning as a Function of Perceptions and Attributions," Journal of Educational Psychology, vol. 76, no. 4 (1984), pp. 647–658.

- Albert Bandura, Self-Efficacy: The Exercise of Control (New York: Freeman, 1997).

- Bernard Weiner, An Attributional Theory of Motivation and Emotion (New York: Springer-Verlag, 1986).

- Yong-Mi Kim and Soo Young Rieh, "Dual-Task Performance as a Measure of Mental Effort in Searching a Library System and the Web," Proceedings of the American Society for Information Science and Technology, vol. 42, no. 1 (2006).

- Salomon, "Introducing AIME."

- Cennamo, "Learning from Video."

- Tom Rath and Barry Conchie, Strengths-Based Leadership: Great Teams, Leaders, and Why People Follow (Washington, DC: Gallup Press, 2009).

- Jude Higdon and Colin McFadden, "Beyond Clickers: Cloud-Based Tools for Deep, Meaningful Cognitive Engagement," presentation at the 2011 EDUCAUSE Learning Initiative Annual Meeting, February 14, 2011.

- Richard E. Clark, "Antagonism Between Achievement and Enjoyment in ATI Studies," Educational Psychologist, vol. 17, no. 2 (1982), pp. 92–101.

- New York Times Editorial Board, "Are They Students? Or 'Customers'?" New York Times, online edition, January 3, 2010.

© 2011 Jude Higdon, Kathryn Reyerson, Colin McFadden, and Kevin Mummey. The text of this EQ article is licensed under the Creative Commons Attribution-Noncommercial 3.0 license.