The calls for more accountability in higher education, the shrinking budgets that often force larger class sizes, and the pressures to increase degree-completion rates are all raising the stakes for colleges and universities today, especially with respect to the instructional enterprise. As resources shrink, teaching and learning is becoming the key point of accountability. The evaluation of instructional practice would thus seem to be an obvious response to such pressures, with institutions implementing systematic programs of evaluation in teaching and learning, especially of instructional innovations.

This would seem to be obvious. But is it the case? Although some institutions are doing leading and systematic work in this area, they are the exception and not the rule. Conversations with CIOs and other senior IT administrators reveal a keen interest in the results of evaluation in teaching and learning to guide fiscal, policy, and strategic decision-making. Yet those same conversations reveal that this need is not being met.

Apart from the fiscal and accountability pressures, the pace of technology change continues unabated. Facing so many options but constrained budgets, faculty and administrators must make careful decisions about support for teaching and learning: what practices to adopt and where to invest their time, effort, and fiscal resources. On an almost daily basis, faculty and their support staff must decide whether or not to use a technology or adopt a new teaching practice. This again argues for evidence-based practice that includes evaluations to inform future decisions.

Susan Grajek

Vice President of Data, Research and Analytics

EDUCAUSE

Is There a Doctor In the House?

In 1988, a keynote speaker at the American Medical Informatics Association conference provided an estimate of the proportion of medical practice that was evidence-based. Extending the definition of evidence even to case studies based on a handful of patients, his estimate was only 20 percent.

To what degree is the use of technology in higher education teaching and learning evidence-based today, even with a liberal definition of evidence? I suspect where we are today is where medicine was in 1988: positioned to move from little to abundant evidence.

Higher education is being held accountable for its costs, efficacy, and value more than ever before. Institutional leadership is requiring proof of technology’s return on investment. Just as patients have with health care, students are taking control of their learning in unprecedented ways, thanks to the abundance of available content and tools. They are also demanding—and responding to—evidence. Evidence can help us understand which technologies to expand and which to ignore and also how to make the best matches between technologies and specific learning situations or learners.

We are at the beginning of our journey. Our focus now is on what works for populations of students. As our body of evidence expands and our analytics expertise deepens, we will be able to determine how to tailor teaching to individual learners and how to track outcomes beyond passing a course or even attaining a degree. Over time, our standards for reasonable evidence will rise as we gain a deeper understanding of how to measure learning attainment and its application to a career and a life, not simply to a course or a degree. More campuses will create small, faculty-led centers of pedagogy to support learning analytics at a local level. The expertise and sophistication that the early leaders of this field possess will become widespread.

Remember this phase of learning analytics. You will—sooner than you think—look back on it as the beginning of a fascinating and very productive journey.

© 2011 Susan Grajek

The EDUCAUSE Learning Initiative (ELI) recently launched a program to address these opportunities and needs. Seeking Evidence of Impact, or SEI (http://www.educause.edu/ELI/SEI), is intended to bring the teaching and learning community into a collective discussion about ways of gathering evidence of the impact of instructional innovations and current practices. The program seeks to

- help the community gain a wider and shared understanding of “evidence” and “impact” in teaching and learning and thereby facilitate the campus conversations on this topic at all levels: administrators, faculty, students, professional staff, and external agencies (e.g., accreditors);

- establish a community of practice around seeking evidence of impact in teaching and learning to share results, as well as best practice in methodology;

- provide professional-development opportunities to support the teaching and learning community in becoming more effective in conducting impact evaluation;

- build a case-bank of examples and model projects as a community resource;

- explore successful institutional and political contexts that support the work of seeking evidence;¹ and

- establish evidence-based practice in all teaching and learning innovations, such as mobile computing, learning space design, the use of social media, the evolution of the LMS, and learning analytics.

As an initial step toward these goals, we embarked on our own project to gather evidence regarding the current state of evaluative practice in teaching and learning. Suspicions and impressions are clues but are not by themselves sufficient. To that end, we worked with the SEI Advisory Group² to create a survey designed to help us

- understand current practices;

- understand institutional priority and level of resourcing; and

- gauge the community’s interest and needs.

Do We Know What We Mean?

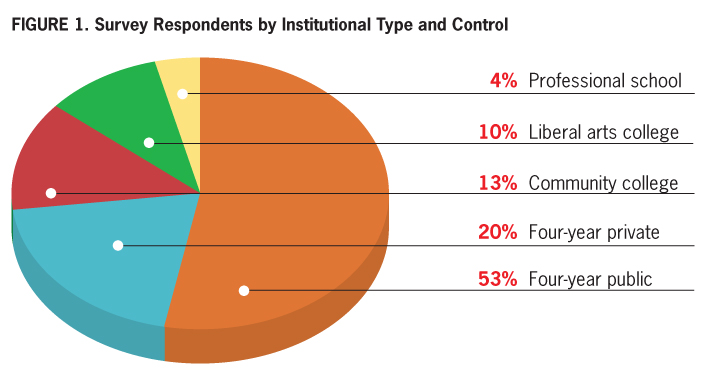

Of the 240 survey respondents, nearly half are from instructional support areas (instructional technology or instructional design), 19 percent work with an IT unit, nearly 9 percent are in senior administration, 8 percent are from the library, and 6 percent are faculty members.

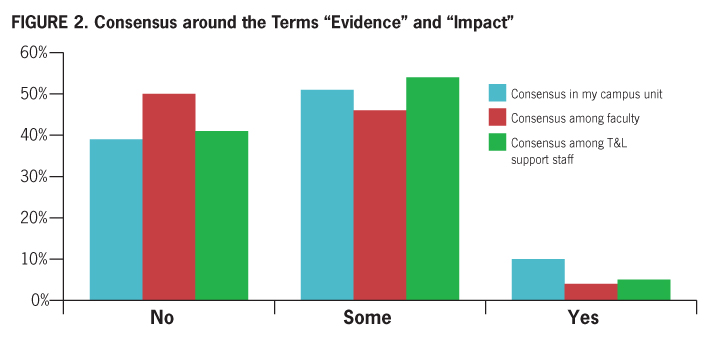

ELI very deliberately adopted the phrase “seeking evidence of impact” for this program. We thought it was important to ascertain whether there is agreement on campus among and across various constituencies about what is meant by “evidence” and “impact.” Perhaps not surprisingly, survey respondents reported there is relatively little consensus. Only 4 percent felt there was consensus among faculty, 5 percent noted consensus among support staff, and 10 percent reported consensus within their campus unit. Low as those percentages are, respondents did indicate that there is at least some consensus around, or common understanding of, these terms.

These results are consistent with the picture that has emerged from the early work in the SEI program. Just what constitutes evidence of impact in teaching and learning is not straightforward. Learning is a very complex phenomenon to describe, much less to measure or evaluate. No doubt this lack of firm consensus makes the task of evidence-gathering all the more daunting. Helping the teaching and learning community to establish a wider and common understanding of these concepts is an important goal of the SEI program. This common understanding is the foundation of any campus dialogue about what is and what is not effective in teaching and learning. This shared understanding needs to inform every aspect of evaluation practice, ensuring that all participants and evaluators, as well as their audience, are speaking a common language.

Institutional Context

Several key questions relate to the extent of and the institutional basis for current impact analysis in teaching and learning. Which campus unit typically does the analysis? Does the unit do so in a collaborative and complementary manner with other units, or is it a lone ranger? Are these efforts done systematically or on an as-needed basis? And how is this work resourced?

We discovered a wide variation of practice in this area, echoing the disparity surrounding the understanding of basic terms. Some of the key findings in this area include the following:

- A clear majority of survey respondents (61%) stated that they were doing evaluation projects, yet only 30% stated that evaluation of teaching and learning was officially a part of their job description.

- Only 15% reported that they gather evidence systematically, and only 9% do so at the institutional level. About one-third report involving their institutional research office.

- Close to three-quarters of the respondents (71%) agreed they have an audience on campus that is receptive to reports on the results of evaluation projects.

These results reveal that the majority of the institutions do not feel compelled to undertake sustained, official research into the effectiveness of their teaching and learning practices. Further, at the schools that do conduct evaluations, the process is not usually done systematically or in concert with other campus groups.

The advantages of doing systematic evaluation at an institutional level are clear: it enables a comparative/longitudinal picture to emerge over time as trends are tracked across semesters and academic years, and it supports accreditation-related work.

Current Impact-Evaluation Practices

When asked to select, from a list of ten options, the top-three indicators of impact in teaching and learning, respondents identified the following:

- Reports and testimonies from faculty and students (57%)

- Measures of student and faculty satisfaction (50%)

- Measures of student mastery (learning outcomes) (41%)

- Changes in faculty teaching practice (35%)

- Measures of student and faculty engagement (32%)

This list suggests the indicators that are valued; however, respondents told us that surveys (86%), anecdotes (73%), observations (61%), and focus groups (50%) are the usual methods and techniques that staff use to gather evidence. Although it is perhaps not surprising that surveys are the most frequently used tool, we were surprised to learn that anecdotes are so heavily relied on. Surveys can be conducted asynchronously, readily lend themselves to quantitative data and analysis, and can reach a large population with few resources. Anecdotes, since they tend to be gathered in a haphazard way without any controls, can be the least convincing of all the techniques we asked about. The relatively high score for observations is also a bit surprising, due to the time needed to visit classes and to record and analyze the observations made. Also striking was how little confidence the community has in final grades as a measure of impact: only 12.5 percent put final grades in their list of the top-three indicators.

We also asked about other techniques that require more skill but that are more likely to draw an audience and influence decision-makers and practitioners. Respondents told us they use artifacts, or the assessment of work products (43%); data mining (36%); analytics (20%); and experimental research utilizing control groups (13%).

Charles Dziuban

Director, Research Initiative for Teaching Effectiveness

University of Central Florida

Impact in a Transformational Environment

Embracing the value of evidence for decision-making has a long history in the EDUCAUSE community—with initiatives such as the EDUCAUSE Center for Analysis and Research (ECAR) and the early transformative assessment agenda adopted by the National Learning Infrastructure Initiative (NLII). With the emergence of the EDUCAUSE Learning Initiative (ELI), however, and its emphases on improving educational effectiveness through technology, seeking evidence of impact has become even more important, for a number of reasons. The most important reason is that in the absence of data, anecdote can become the primary basis for decision-making. Rarely does that work out very well.

We can become overwhelmed by what seems like an insurmountable evaluation task, especially when we try to provide summative answers to questions such as, “Does technology enhance students’ learning?” As we consider our evaluation plans, therefore, we must try to appreciate that the vast majority of our work will be formative and, if effective, will foster ongoing conversations. At the beginning, it is best to start small; progress is better made in bite-sized chunks. Using a flexible network of colleagues to generate research questions can lead to immediate credibility and provide leverage for future work. If even a few people value our findings, we have taken an important step toward establishing an autocatalytic evaluation process—one that builds its own synergy.

We live by three principles: uncollected data cannot be analyzed; the numbers are helped by a brief and coherent summary; and good graphs beat tables every time. Even the most experienced of us are bumping along in this environment that changes with accelerating rapidity, but we know that there is genuine value to be found in seeking evidence of impact. Almost everyone needs credible information. We should do our best to provide it.

© 2011 Charles Dziuban

So here we face a bit of a conundrum: how can we support those involved in the work of seeking evidence in a way that maximizes the yield of their work or influence at our institutions? This again speaks to the need to engage in collaborative measurement work at multiple levels and to discuss and reach agreement on what constitutes convincing evidence and on the best methods and techniques to collect and share it.

The survey results also indicate a need for support in undertaking impact-evaluation projects. Respondents noted several key challenges they face in pursuing these efforts, all pointing to professional-development opportunities:

- Knowing where to begin to measure the impact of technology-based innovations in teaching and learning

- Knowing which measurement and evaluation techniques are most appropriate

- Knowing the most effective way to analyze evidence

Clearly there is a need within the community for basic skills. This is not surprising, since conducting impact evaluation in teaching and learning is a complex undertaking. How can we determine if students are learning Shakespeare “better,” if clickers are having an impact, or if the use of tablets has improved learning and the overall course experience? How can we isolate the variables well enough to be able to discern the specific effects that the innovation or practice introduces in the course experience?
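As one hedged illustration of what a control-group comparison might involve, the following minimal Python sketch computes a standardized mean difference (Cohen's d) between a course section that used an innovation and a comparison section. The scores are invented for illustration and are not survey data, and the names `cohens_d`, `clicker_section`, and `comparison_section` are our own hypothetical labels. A real study would also need to account for differences between the groups that have nothing to do with the innovation.

```python
from math import sqrt
from statistics import mean, stdev


def cohens_d(treatment, control):
    """Standardized mean difference using the pooled sample standard deviation."""
    n_t, n_c = len(treatment), len(control)
    s_t, s_c = stdev(treatment), stdev(control)
    # Pool the two sample variances, weighting each by its degrees of freedom.
    pooled_sd = sqrt(((n_t - 1) * s_t**2 + (n_c - 1) * s_c**2) / (n_t + n_c - 2))
    return (mean(treatment) - mean(control)) / pooled_sd


# Hypothetical exam scores, invented purely for illustration.
clicker_section = [78, 85, 90, 72, 88, 81]
comparison_section = [70, 75, 80, 68, 77, 74]

effect = cohens_d(clicker_section, comparison_section)
print(f"Standardized difference between sections: {effect:.2f}")
```

A positive value indicates that the section using the innovation scored higher on average. By itself it says nothing about why, which is exactly the problem of isolating variables.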

The Audience

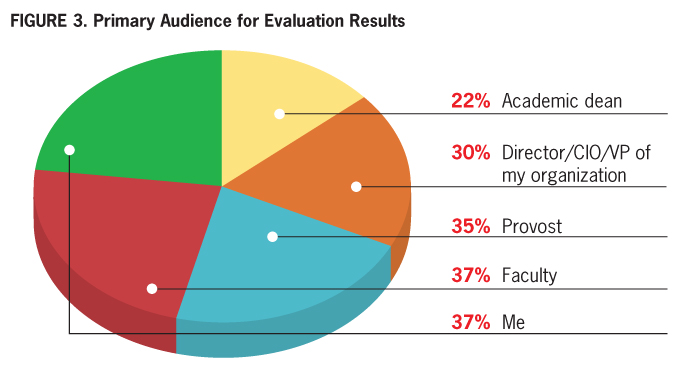

Who is the audience for evidence gathering and impact analysis? This question is arguably the most important and should serve as the starting point for any evaluation effort. If we don’t know who will consume our evidence—to make adoption decisions, to transform teaching practice, to take our classes—we are unlikely to know what kind of evidence to collect, how to collect it, and then how to communicate what we’ve learned.

Evaluation results by themselves rarely change current practices. For the results to be persuasive, an audience must receptively hear the evaluation outcomes and be willing to take action accordingly. The challenge of persuasion is what ELI has been calling the last mile problem. There are two interrelated components to this issue: (1) influencing faculty members to improve instructional practices at the course level, and (2) providing evidence to help inform key strategic decisions at the institutional level.

It is clear that the community knows the key audience for evaluation results. Given the demographics of the survey respondents—with the largest percentage in instructional design, support, and curricular technology—we first must convince ourselves that the technologies and innovations we introduce are having an impact. It also makes sense that faculty would be seen as part of the key audience, since they most directly affect campus teaching practice.

But constituencies sometimes disagree on the kind of evidence they consider persuasive, which complicates the design of evaluation projects in teaching and learning. To address the last mile problem, practitioners must know the ultimate audience for their work and must design their projects accordingly.

Key decisions include the following:

- Whether the evaluation team should gather qualitative or quantitative data or a mix of both

- Which markers will best measure the impact of an innovation or practice

- Which evaluative rubrics will be most persuasive

- Whether the evaluation team needs to have cross-institutional participation in order to gain credibility with key audiences

- Which report formats and publication channels will best establish the persuasiveness of the study results

All of these factors need to be taken into account in order for the project findings to act as a change agent on campus.

Seeking Community of Practice

Through this survey and various other communications, members of the teaching and learning community have told us they would value the establishment of a community of practice around seeking evidence of impact in teaching and learning. The ELI survey has shown there are significant professional-development opportunities in the area of impact evaluation in teaching and learning. Broadly summarized, our results reveal a disparity between the keen interest in research-based evaluation and the level of resources that are dedicated to it—prompting a grass-roots effort to support this work.

J. D. Walker

Manager, Research and Evaluation Services

University of Minnesota

Habits of Highly Effective Researchers

Higher education leaders say they want more evaluation, more research, and more data to guide their institutions' use of technology, but rarely do colleges and universities systematically gather evidence of impact. Researchers can help change this situation by addressing some of its most common causes:

• Bad timing. Evaluation is frequently an afterthought, following the planning and implementation of a new educational technology, when it is often too late to conduct the most informative types of research. Solution: Get in on the ground floor so that data-gathering can be built into the planning process and so that fruitful research designs, such as pre-post measures and the collection of baseline data, can be utilized.

• Recalcitrant data. Honest evaluations may not support the desired conclusions; for this reason, data-gathering can be threatening. Solution: Good timing can help with this, by collecting data on a pilot project before full implementation and also by holding candid conversations with stakeholders prior to beginning an evaluation.

• Lack of expertise. Some aspects of research require specialized knowledge. Solution: Any systematic, replicable, thoughtful investigation guided by well-crafted research questions is likely to provide data that are far better than no data at all. Further, every institution should have educational research consultants who can provide assistance with research design, data-collection methods, and data analysis. In addition, there are numerous high-quality online resources.

• Poverty. Good research costs money, and budgets are tight. Solution: There is no way around this one. Good evaluations cannot be done on the cheap, and money is required not only to pay for staff time but also to provide incentives for research participants, hire data analysts, etc. That said, the costs of good research are minuscule in comparison with most expenditures on technology, and the cost of implementing an ineffective technology can be enormous.

© 2011 J. D. Walker
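The pre-post design mentioned in the sidebar above can be sketched in a few lines, assuming baseline scores were collected from the same students before the innovation was introduced. This is a minimal Python illustration rather than a recommended methodology: the scores are invented, and `mean_gain` is a hypothetical helper name.

```python
from statistics import mean


def mean_gain(pre, post):
    """Average per-student change between a baseline and a follow-up measure."""
    if len(pre) != len(post):
        raise ValueError("pre and post must cover the same students")
    # Pair each student's baseline score with that student's follow-up score.
    gains = [after - before for before, after in zip(pre, post)]
    return mean(gains)


# Hypothetical scores for the same four students, invented for illustration.
baseline_scores = [55, 60, 48, 70]
followup_scores = [65, 72, 50, 81]

gain = mean_gain(baseline_scores, followup_scores)
print(f"Mean gain per student: {gain}")
```

Without the baseline measure there would be nothing to subtract, which is why the data collection has to be planned before the innovation is rolled out.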

The picture that emerges is that whereas most schools conduct some degree of evaluation work, the institutional basis for that work is limited. A campus culture for evaluation work in teaching and learning is not firmly established at most colleges and universities. The evaluative effort is carried on primarily by individual teaching and learning support units, most often without official mandate or resource support. The majority of this work is also done without the help of the campus office of institutional research.

We are convinced there are significant opportunities and needs in the area of evaluating the impact of instructional innovations and current practices. Through the Seeking Evidence of Impact program, we hope to work with the teaching and learning community to enable this evaluation and to analyze the results.

1. This is one of the key findings of the “Wabash Study 2010”: <http://www.liberalarts.wabash.edu/wabash-study-2010-overview/>.

2. The SEI Advisory Group members are Randy Bass, Georgetown University; Gary Brown, Washington State University; Chuck Dziuban, University of Central Florida; John Fritz, University of Maryland, Baltimore County; Susan Grajek, EDUCAUSE; Joan Lippincott, Coalition for Networked Information; Philip Long, University of Queensland; and Vernon Smith, Rio Salado College, Maricopa Community Colleges.

© 2011 Malcolm B. Brown and Veronica Diaz. The text of this article is licensed under the Creative Commons Attribution-NonCommercial-NoDerivs 3.0 License (http://creativecommons.org/licenses/by-nc-nd/3.0/).

EDUCAUSE Review, vol. 46, no. 5 (September/October 2011)