We—those of us in the higher education community—live in interesting times. For decades, higher education has occupied a relatively stable, trusted position in our society, as a place to invest our most precious resources: our youth, our minds, our future, our values.

Today, the purpose and value of higher education are being questioned and transformed. A campus-based, full-time experience for 18- to 22-year-olds has given way to a much more diverse educational landscape and student demographic. What was once seen as a requisite step in the intellectual journey to maturity is now increasingly viewed as a necessity for employment and financial security.1 The value proposition is now more about economic security than self-actualization. Yet with rising costs, mounting student debt (over two-thirds of college graduates carry debt, and the average has risen to $24,000),2 and shifting enrollment patterns, the public is questioning whether a college education is affordable at the very moment a degree has come to be seen as essential for job security.

With imperiled endowments, cuts in federal and state support, burgeoning costs of regulatory compliance, and the resulting institutional budget shortfalls, higher education leaders are hard-pressed to respond to calls for lowering the costs of higher education. The business model of higher education is broken.3

Within higher education, information technology is experiencing its own challenges:

- The maturation of commodity IT services and the financial pressures on higher education budgets are driving campus IT organizations to centralize and outsource back-end operations at unprecedented levels.

- Students, faculty, and administrators are both more and less dependent on IT operations and services than ever before. They still control the end-user device section of the procurement chain, but there are now more device types, interfaces, and use locations from which to choose, each with its own support implications for campus IT services. End users rely on and appreciate external, web-based services that set the bar for responsiveness and features. By comparison, local campus web services often underdeliver and disappoint.

- From the most senior levels, IT leaders are being held accountable for the cost, quality, quantity, timeliness, and value of the services they deliver, the projects on which they embark, and the decisions they make.

- Externally, IT leaders are busy responding to, and often simply reacting to, constantly evolving security challenges and multiple, uncoordinated state and federal regulatory requirements.

Questions IT Data Can Help Answer

Efficiency

- What portion of the total cost of college completion, course delivery, admissions, research, and other elements of higher education do IT costs represent?

Effectiveness

- How do different types of IT environments affect college completion, research productivity, and administrative effectiveness?

- What are the best practices for using information technology to maximize learning outcomes, facilitate faculty productivity, and improve college completion?

- What is the "sweet spot" for institutional IT spending when balancing efficiency and outcomes?

Innovation

- Which IT environments are most conducive to innovation?

- What are the minimum IT requirements for supporting innovation?

If, as John Walda, President and CEO of the National Association of College and University Business Officers (NACUBO), has stated, “less is the new reality,” how do we do better—not more, not less, but better—with less?

Enter data. Enter research. Enter analytics. Analytics, and the data and research that fuel it, offers the potential to identify broken models and promising practices, to explain them, and to propagate those practices. Information technology now supports and enables all aspects of higher education. Information technology is paradoxically—and rightly—viewed both as an added expense and as a source of potentially transformational efficiencies. The great challenge for IT leaders and managers is to successfully resolve this paradox for their institutions. To do so, they will require the external models and internal evidence that data and research can provide.

Higher education IT data now needs to go beyond descriptive analysis—reports, queries, and drill-downs—to new ways of using data and research in order to align IT strategy with institutional strategy, plan new services and initiatives, manage existing services, and operate the IT organization on a daily basis. Higher education itself needs expanded access to high-quality data and analysis about information technology and its relationship to institutional efficiency, learning effectiveness, and college completion.

I Love You, You’re Perfect, Now Change

Existing research organizations and organized research activities to collect IT data across the higher education sector have not kept pace with the demand for more actionable and truly comparable information. Research on information technology in higher education is still essentially opportunistic and descriptive in nature—cataloging counts and tracking opinions.

This is to be expected from a new capability. The field of information technology itself is quite young; the practice of researching the field is even newer. Organized measurement and benchmarking efforts in higher education IT organizations began in the 1990s, with initiatives such as the COSTS Project (http://www.costsproject.org/) and the Campus Computing Project (http://www.campuscomputing.net/). The 1999 book Information Technology in Higher Education: Assessing Its Impact and Planning for the Future4 was seen as a harbinger of the concept that is now commonplace: “You can’t manage what you can’t measure.” The EDUCAUSE Center for Analysis and Research (ECAR) filled a void when it was created, just over ten years ago.

The field precedes its professionalization, with visionary “amateurs” leading the way. The word amateur originates from the Latin amātor, meaning lover. It takes a cadre of intensely interested, creative, and competent individuals to create a field. Until well into the nineteenth century, science and scholarship were dominated by amateurs. By the twentieth century, professional scientists and scholars, with their access to restricted information sources and technologies and their many years of apprenticeship and training, predominated.5 We are likely seeing a similar transformation of IT measurement activities today as the amateurs who pioneered this field inspire institutions and individuals to professionalize it. These professionals are applying and adapting research techniques and measurement practices from related fields, including business research and IT benchmarking in industry.

The driving need for better research and better data, accompanied by a new professionalization of the field of IT research in higher education, will change the nature of the research, data, and analytics available to IT professionals and institutional decision-makers. Some of the changes will be visible, some will be behind-the-scenes, but they will all improve the alignment, planning, management, and operation of information technology in higher education.

Where are we today? What needs to change?

IT Research Today

As highlighted in “Surveying the Landscape,” the cover feature article for this issue of EDUCAUSE Review, many organizations are making significant headway in the study of higher education. Unfortunately, however, in much of today’s research on information technology within higher education, data are collected from any individuals or institutions willing to participate, without regard to or reflection on the population to which the results will be generalized. These results are generally neither weighted nor otherwise adjusted to reflect the population being measured. As a result, research samples are neither representative nor consistent from survey to survey. For example, the institutions responding to one survey might include a much larger share of public institutions than those responding to another. Any comparisons made across surveys, or generalizations to a population that is quite different from the sample, are flawed from the start.
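To make the idea of weighting concrete, here is a minimal post-stratification sketch. It assumes hypothetical population shares for just two strata (public and private institutions) and a hypothetical yes/no survey item; a real study would take population counts from an authoritative source and would usually use more strata. Each respondent is weighted by its stratum's population share divided by its sample share, so over-represented groups count for less.

```python
from collections import Counter

def poststratification_weights(sample_strata, population_shares):
    """Weight for each stratum = population share / sample share.

    sample_strata: one stratum label per responding institution.
    population_shares: stratum label -> share of the population (shares sum to 1).
    """
    counts = Counter(sample_strata)
    n = len(sample_strata)
    return {s: population_shares[s] / (counts[s] / n) for s in counts}

def weighted_mean(values, strata, weights):
    """Mean of the values, with each respondent counted at its stratum's weight."""
    total_weight = sum(weights[s] for s in strata)
    return sum(v * weights[s] for v, s in zip(values, strata)) / total_weight

# Hypothetical respondents: 6 public and 2 private institutions,
# while the (hypothetical) population is 40% public and 60% private.
strata = ["public"] * 6 + ["private"] * 2
values = [1] * 6 + [0] * 2          # 1 = reports using some practice, 0 = does not
population = {"public": 0.4, "private": 0.6}

weights = poststratification_weights(strata, population)
unweighted = sum(values) / len(values)               # 0.75
weighted = weighted_mean(values, strata, weights)    # about 0.40
```

In this toy data the unweighted estimate (75%) overstates the population value (about 40%) because public institutions, which all answered yes, are over-represented among respondents.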

A vicious cycle of dwindling survey participation is further eroding the representativeness of the data. Participation in IT research is erratic. A few institutions participate regularly, many participate occasionally, and half or more do not bother at all to answer most surveys or inventories. Worse still, this pattern varies by institution type, with doctoral institutions generally participating at far higher rates than community colleges.

As response rates decrease steadily (a response rate of 25%, now highly satisfactory, would have been considered unacceptably low even ten years ago), requests to complete both formal surveys and informal listserv queries are increasing and are being distributed as universally as possible, generating a survey burden that erodes commitment, response rates, and data quality. Research organizations ignore one another and turn a blind eye to redundant efforts, further contributing to an unproductive abundance of IT survey research. We need to change our behavior—as researchers and as prospective respondents—to transform the vicious cycle to a virtuous cycle.

Finally, research about the field of information technology is often initiated without established objectives or an analysis plan. Consequently, surveys tend toward exhaustiveness without necessarily measuring the very things on which institutions need to base decisions. It is not uncommon for studies with over 300 variables to take 18 months to generate reports of over 120 pages.

Rigorous levels of statistical significance are applied during data analysis, and the results of every significant comparison are carefully reported and interpreted, without regard to the fact that chance alone will produce a certain number of significant findings whenever many comparisons are made. More attention is paid to statistical significance than to practical significance, and to sample size than to sample quality.
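The arithmetic behind that warning is short. If m independent comparisons are each tested at significance level alpha and no real effects exist, about m × alpha of them will still come out “significant,” and the chance of at least one such false positive is 1 - (1 - alpha)^m. The sketch below assumes independent tests and illustrative numbers; it uses the Bonferroni correction (testing each comparison at alpha / m) as one simple, conservative remedy, not as the method any particular study uses.

```python
def expected_false_positives(m, alpha=0.05):
    """Expected number of 'significant' results among m tests when no real effects exist."""
    return m * alpha

def familywise_error_rate(m, alpha=0.05):
    """Probability of at least one false positive across m independent tests."""
    return 1 - (1 - alpha) ** m

def bonferroni_significant(p_values, alpha=0.05):
    """Flag p-values that stay significant when each test uses alpha / m."""
    threshold = alpha / len(p_values)
    return [p < threshold for p in p_values]

# Illustrative: an analysis running 300 comparisons at alpha = 0.05
spurious = expected_false_positives(300)      # about 15 chance "findings"
at_least_one = familywise_error_rate(300)     # very close to 1
flags = bonferroni_significant([0.0001, 0.004, 0.03])  # [True, True, False]
```

Bonferroni is deliberately strict; less conservative procedures exist, but the point stands either way: the more comparisons a report makes, the more of its “significant” results chance alone will supply.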

The most sophisticated analyses tend to be univariate or, at best, bivariate, even though some of the most important questions require multivariate data analysis. Univariate data analysis examines data elements, or variables, one by one. Bivariate analysis considers the relationship between two variables. In the real world, the decisions and issues of most interest transcend one or even two dimensions. Multivariate data analysis permits examining the interplay and impact of several factors at once. A significant multivariate finding can trump a significant univariate finding, for example by demonstrating that an apparent high-level trend is actually due to a strong effect within a single subgroup. Limiting ourselves to univariate or bivariate analyses prevents us from understanding what is really happening.
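A toy example shows how adding even one more variable can change the story. The records below are hypothetical: a bivariate comparison suggests that some practice X is associated with a 10-point higher outcome across the board, but splitting by institution type shows that the whole gap comes from doctoral institutions. Stratifying like this is only the simplest step toward multivariate analysis; a fuller analysis would more likely fit a model, such as a regression with several predictors.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical records: (institution type, uses practice X?, outcome score)
records = [
    ("doctoral", True, 80), ("doctoral", True, 82),
    ("doctoral", False, 60), ("doctoral", False, 62),
    ("community", True, 70), ("community", True, 70),
    ("community", False, 70), ("community", False, 70),
]

def gap(rows):
    """Mean outcome with practice X minus mean outcome without it."""
    with_x = [score for _, uses_x, score in rows if uses_x]
    without_x = [score for _, uses_x, score in rows if not uses_x]
    return mean(with_x) - mean(without_x)

# Bivariate view: practice X vs. outcome, ignoring institution type.
overall_gap = gap(records)            # 10: looks like a sector-wide effect

# Add a third variable: compute the same gap within each institution type.
by_type = defaultdict(list)
for row in records:
    by_type[row[0]].append(row)
subgroup_gaps = {t: gap(rows) for t, rows in by_type.items()}
# {'doctoral': 20, 'community': 0}: the effect lives in one subgroup
```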

IT Data Today

A similar disparity exists with data. IT practitioners have provided mountains of data to researchers over the past two decades. Those data are marooned in independent databases and are effectively discarded as soon as a particular study’s results are reported. The only exceptions are the frequently redundant survey engines that cycle annually or semi-annually and compare trends over the years, trends—as noted above—that are based on unrepresentative samples. Even if, however, we could collect all the data from surveys from the past several years, we would be hard-pressed to develop a useful repository. The samples across years are inconsistent, rendering year-by-year comparisons highly suspect.

In the absence of practical, timely, and actionable data, CIOs and other IT leaders find themselves resorting to identifying peer practices by e-mailing a set of questions to a listserv or a set of trusted colleagues and collecting whatever replies are received. There are no established data stores or convenient mechanisms to collect representative, just-in-time data to more accurately provide answers.

Benchmarking efforts tend to be created from first principles, and there are no standards within higher education information technology for measuring basic concepts such as availability, disaster recovery, or even costs. Attempts to apply standards from outside higher education often result in costly and uncomfortable fits that ignore IT services specialized for the core and unique missions of higher education and that strand and fragment data in multiple, competing, proprietary repositories to which the institutions that contributed data have no direct or ongoing access.

Efforts to measure across the industry have not been particularly comfortable either. Every institutional type—doctoral universities or community colleges, public or private institutions, multi-campus or single-campus institutions, international institutions or U.S. institutions—chafes under a one-size-fits-all data collection, convinced that the research instruments have been designed to suit an opposite institutional type.

We have an abundance of data today. We still lack, however, the essential data that would enable us to tie the IT budget back to IT services, to monitor and compare key indicators of service performance, or to set aspirational targets for IT maturity and capabilities. College and university IT departments have been measuring user satisfaction for years; this was, arguably, the starting point for IT metrics in higher education. Yet every institution measures it differently, so we cannot responsibly benchmark across institutions even so basic and established a metric as user satisfaction.

IT Analytics Today

IT analytics aren’t even on the radar. Analytics would require agreed-upon and consequential outcomes, consistent definitions, consistently collected data, and a data-store large enough to analyze.

Analytics is key to helping higher education understand and manage its costs and outcomes,6 which are coming under increasing scrutiny. Analytics brings the ability to make sense of complex environments and to make decisions based on the new understanding that emerges. Implementing analytics and applying it to make data-driven decisions is a differentiator between high-performing and low-performing organizations.7

Effective analytics requires good data: not only the right data to understand performance and outcomes but also data that are standardized across all the environments being measured. Higher education is just now recognizing both the importance of analytics and its own lack of the good data needed to apply analytics to its most pressing challenges.

Government interest in higher education costs, performance, and funding is increasing. Where government interest begins, regulations often follow. The Common Education Data Standards (CEDS) initiative (http://nces.ed.gov/programs/ceds/) is currently a voluntary effort but may presage future regulatory directions. Accountability demands extend beyond regulation: the public and the media are questioning not just the expense but the very value of higher education. Stephen Wolfram has asked: “What’s the point of universities today? Technology has usurped many of their previous roles.”8 His question is perhaps more provocatively worded, but others are similarly questioning what used to be a given.

If those of us in higher education information technology fail to identify the right data to measure and the right ways to measure it, others will step into the breach. We will likely have data standards and reporting requirements thrust on us from external sources. We will be asked to demonstrate accountability according to measures that are not of our making and that may very well not be to our liking.

It is at this time, when we have so little truly actionable or usable data, that we most need high-quality data and research. The assessment movement is inching closer to higher education IT services. It is already upon us within our individual institutions—with CFOs, CAOs, and presidents demanding metrics, dashboards, business cases, and business intelligence to support their understanding and decision-making. Information technology, which has been instrumental in building data warehouses for financial and administrative data, finds itself coming up short. We are recycling old metrics that were defined a decade ago and based on casually collected data, ignoring the solid IT performance and maturity measures that are being established in other industries. We have begun to cry out for peer comparisons and benchmarks, and the best just-in-time data we can generate come from a handful of institutions that reply to listserv pleas.

We must do better. Fortunately, we can.

| The Questions IT Leaders Must Answer | The Current Benchmarking Data | The Needed Data |
|---|---|---|

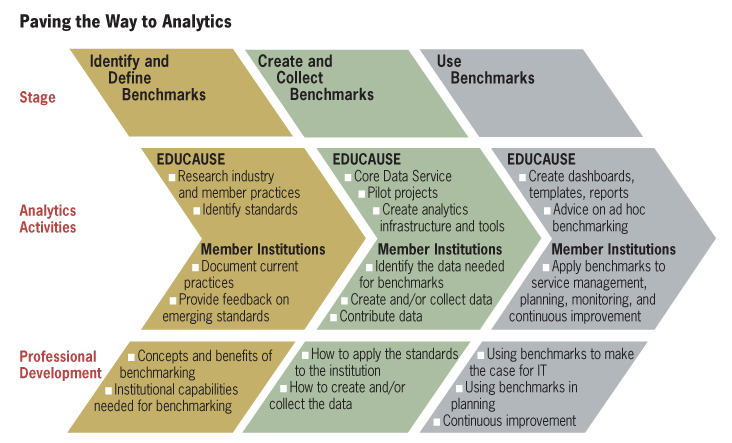

Maturing IT Research, Data, and Analytics

Data, research, and analytics activities for information technology—just like project management, service management, process management, and a set of other IT management activities—need to follow a developmental arc, maturing from the purview of a handful of pioneering individuals who developed the templates in use today to a professional subdiscipline with a clear set of purposes, processes, and outputs. This has already begun in individual areas (help-desk research is particularly mature, for example) and in individual institutions. It is time for the profession to thoughtfully define and build data, research, and analytics capabilities and services to meet current and coming challenges, as well as to design and scale them to work effectively across institutions.

On campus, the data needed for higher education analytics are scattered throughout various offices, but two departments generally organize and report on institutional data: institutional research and information technology, with the former focusing on academic data and external institutional reporting and the latter on administrative and institutional operational data. Although these departments are combined in some institutions, they are separate in approximately nine out of ten colleges and universities, according to the 2010 survey of the EDUCAUSE Core Data Service (http://www.educause.edu/coredata).9 Much of the data needed to quantify faculty performance and student outcomes lies outside both groups, in other departments or professional schools.

Information technology is uniquely positioned to organize, standardize, collect, compile, and deliver data and analytics. Information technology possesses a critical mass of institutional performance data, along with the data warehousing and business intelligence capabilities to understand how to manage complex and diverse collections of data. This opportunity for information technology to lead the institution in higher education analytics comes at a time when many in the profession are sensing a declining influence: Will information technology become a “plumbing shop,” or will it remain a strategic unit led by a C-level executive who is part of the presidential cabinet? It may very well be that leadership of the institution’s analytics is the key to retaining that strategic seat at the table.

EDUCAUSE Actions

The EDUCAUSE mission is to serve higher education through the effective use of information technology. “Effective” is a judgment based on data and analysis. EDUCAUSE currently has two major services for data and analysis: the Core Data Service (CDS) and the EDUCAUSE Center for Analysis and Research (ECAR). It is time for both to evolve to meet new thresholds of accountability, to leverage the opportunities present in the maturing field of analytics, and to meet the community’s pressing and growing need for actionable research, representative data, and the ability to use that data to make decisions.

As the professional association for information technology in higher education, EDUCAUSE can serve as a resource hub and coordinator of efforts to organize and standardize data, reorient research, develop cross-institutional analytics capabilities, and deliver the professional development that institutional leaders and IT professionals need to be able to measure their own performance, use data to make decisions, and implement continuous-improvement initiatives based on actively monitored data.

ECAR Research

New Directions for EDUCAUSE Data, Research, and Analytics

ECAR

- Fewer, shorter surveys

- Focus on actionable findings

- Shorter reports

- Faster timeframe to execute research

- PowerPoint versions of reports

- "Research hubs" to organize a rich set of resources for each major study

Core Data Service

- More—and more useful—reports

- Reoriented content focusing on IT metrics, KPIs, maturity indices, and costs

- Non-IT data sources

- Relevant content from recent ECAR surveys

- Metrics, benchmarking, and continuous-improvement content for use in EDUCAUSE professional development activities

ECAR’s research is evolving. Future research will concentrate on addressing issues that are most important to higher education information technology and on providing actionable results. ECAR staff will mine existing data-stores. By using data that institutions have already provided, data from recent surveys, data from the Core Data Service, or data available from external data-stores, ECAR can answer many questions without collecting new data.

ECAR has adopted a sampling methodology for collecting data. A representative sample of only one-half or even one-third of member institutions will be asked to complete surveys. With concerted and targeted follow-up efforts and appropriate incentives, response rates will increase and research samples will be more representative of higher education institutions. All this will result in fewer and shorter surveys for member institutions. ECAR studies will move from planning to publication in four to six months.
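Drawing a sample that is representative by design, rather than by luck, is commonly done by stratifying. The sketch below is a hypothetical illustration, not ECAR's actual procedure: it groups member institutions by an assumed stratum label (here, institution type) and invites the same fraction, for example one-third, from each stratum, so that the invited sample mirrors the membership mix.

```python
import random
from collections import Counter, defaultdict

def stratified_sample(institutions, fraction, seed=None):
    """Invite the same fraction of institutions from every stratum.

    institutions: list of (institution_id, stratum) pairs; the stratum labels
    used here (institution types) are hypothetical.
    fraction: share of each stratum to invite, e.g. 1/3.
    """
    rng = random.Random(seed)
    strata = defaultdict(list)
    for inst_id, stratum in institutions:
        strata[stratum].append(inst_id)
    invited = []
    for stratum, members in strata.items():
        # Round to the nearest whole institution, but invite at least one per stratum.
        k = max(1, round(len(members) * fraction))
        invited.extend((inst_id, stratum) for inst_id in rng.sample(members, k))
    return invited

# Hypothetical membership: 30 doctoral, 60 master's, 90 community colleges.
members = (
    [(f"doc-{i}", "doctoral") for i in range(30)]
    + [(f"ma-{i}", "masters") for i in range(60)]
    + [(f"cc-{i}", "community") for i in range(90)]
)
invited = stratified_sample(members, fraction=1/3, seed=42)
mix = Counter(stratum for _, stratum in invited)  # 10 doctoral, 20 masters, 30 community
```

Because every stratum is sampled at the same rate, follow-up effort can be concentrated on the invited institutions, and nonresponse within each stratum can be monitored directly rather than discovered after the fact.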

ECAR has also redesigned the forms and formats for communicating research results. Each major research study will have a dedicated “research hub,” or microsite, with a rich collection of materials tailored to the topic.

Core Data Service

The Core Data Service (CDS) is changing to meet changing requirements. The survey will decrease in length. To facilitate integration across data sources, a subset of items from the most recent ECAR surveys will be added to each year’s survey.

EDUCAUSE is laying the groundwork to develop an analytics capability based on the CDS. In the coming months and years, the CDS will evolve to incorporate more industry-standard key performance indicators, maturity indices, and benchmarks. EDUCAUSE will bring together members to design the content, adapt IT industry standards to higher education when necessary, and ensure that measures are flexible enough to apply to all types of institutions.

The CDS is also integrating and affiliating with other data sources to foster the development of a “data commons” for higher education IT data. As a modest beginning, more data from the Integrated Postsecondary Education Data System (IPEDS, http://nces.ed.gov/ipeds/) have been added to this year’s CDS, as well as summary salary data from the annual compensation survey of the College and University Professional Association for Human Resources (CUPA-HR, http://www.cupahr.org/). IPEDS, a program of the National Center for Education Statistics, uses a series of surveys to collect data about higher education enrollments, program completions, faculty, staff, and finances. Over time, EDUCAUSE also hopes to revise the benchmarking interface of the CDS to enable contributing institutions to interact with the data more fully and easily.

Professional Development

The move to benchmarking and analytics cannot be achieved without helping EDUCAUSE member institutions improve their capabilities in this area. Institutions that have not begun to measure and benchmark their costs and services need help getting started. Institutions that are already collecting benchmarks and monitoring services need help initiating continuous-improvement programs to use the data to make changes and decisions.

Community Actions

Everyone in the higher education IT community has a stake—and a role to play—in the improvement of IT data and research. CIOs and IT leaders must become literate about the value and uses of data to make the case for the value of information technology in higher education, to guide wise investments, sourcing decisions, and service improvements, and to align the activities of the IT organization with the institution. They must also develop the roles and capabilities their IT organizations need to create, collect, and use data. IT leaders need to challenge themselves to use those capabilities on behalf of the entire institution, not only information technology. Institutional leadership should consider the untapped potential of information technology in connecting, organizing, and managing institutional data and in helping leaders use data to make decisions.

How IT Professionals Can Improve Data and Research

- Develop metrics and benchmarking literacy.

- Identify the data needed for metrics and benchmarking and begin to collect it.

- Put data to work with continuous-improvement activities.

- Use data to make the case for the value of information technology to institutional priorities.

- Contribute to the Core Data Service.

- Participate in ECAR surveys when invited.

IT managers and professionals must understand the metrics standards that apply to their areas and how to create or collect the data needed to derive those metrics. The IT organization must develop the skills and habits of using data to identify opportunities for continuous improvement. Continuous improvement is itself a capability that IT organizations must master.

Moving beyond each institution, IT leaders can contribute to developing a data commons for higher education information technology by providing their institution’s data. EDUCAUSE is a steward of data on behalf of its member institutions. The great accomplishment of the CDS has been to foster a community of trust and openness around collective data. Over time, as IT organizations develop their internal metrics and benchmarking capabilities, completing the CDS will become a simple matter of entering the data that IT organizations already use to plan, manage, and monitor their services.

The Future

The professionalization of IT research, data, and analytics is necessary if higher education is to achieve full value from information technology. By building a data and analytics infrastructure that is created and owned by higher education itself, higher education institutions can control the pace, cost, and outcomes. One of the likely outcomes will be to come full-circle: higher education’s application of data and research to the management of information technology began with self-taught IT professionals. A dedicated set of investments and activities staffed by data and research professionals will ultimately give IT professionals and institutional leaders even richer opportunities to make data-driven decisions.

1. Pew Social Trends Staff, “Is College Worth It?,” Pew Research Center, May 15, 2011, <http://pewsocialtrends.org/2011/05/15/is-college-worth-it/>.

2. Diane Cheng and Matthew Reed, “Student Debt and the Class of 2009,” The Project on Student Debt, October 2010, <http://projectonstudentdebt.org/files/pub/classof2009.pdf>.

3. John Walda, “Top Issues Facing Today’s Business and Financial Officers,” EDUCAUSE Live!, August 10, 2011, <http://www.educause.edu/Resources/TopIssuesFacingTodaysBusinessa/233342>.

4. Richard N. Katz and Julia A. Rudy, eds., Information Technology in Higher Education: Assessing Its Impact and Planning for the Future (San Francisco: Jossey-Bass Publishers, 1999), <http://www.educause.edu/Resources/InformationTechnologyinHigherE/160438>.

5. David D. Friedman, Future Imperfect: Technology and Freedom in an Uncertain World (New York: Cambridge University Press, 2008), chapter 9, “Reactionary Progress: Amateur Scholars and Open Source,” p. 123.

6. Phil Long and George Siemens, “Penetrating the Fog: Analytics in Learning and Education,” EDUCAUSE Review, vol. 46, no. 5 (September/October 2011), <http://www.educause.edu/library/ERM1151>.

7. Steve LaValle, Michael S. Hopkins, Eric Lesser, Rebecca Shockley, and Nina Kruschwitz, Analytics: The New Path to Value, MIT Sloan Management Review and IBM Institute for Business Value, 2010, <http://sloanreview.mit.edu/feature/report-analytics-the-new-path-to-value/>.

8. Wolfram quoted in “Alpha Geek,” The Economist: Technology Quarterly, June 2, 2011, <http://www.economist.com/node/18750658>.

9. Data were collected between April and July of 2011. The Institutional Research and the IT departments are part of the same organization in 9% of colleges and universities. Interestingly, this is similar to the share of institutions (11%) in which the library and the IT department are combined.

© 2011 Susan Grajek. The text of this article is licensed under the Creative Commons Attribution-NonCommercial-NoDerivs 3.0 Unported License (http://creativecommons.org/licenses/by-nc-nd/3.0/).

EDUCAUSE Review, vol. 46, no. 6 (November/December 2011)