Big data and cloud infrastructures are changing the research landscape across higher education, making collaborations among disciplines globally not only possible but highly desirable to faculty and students alike. Several case studies illuminate new research strategies and collaboration examples that these increasingly available technologies make possible.

Peter M. Siegel is CIO and vice provost for Information Technology Services, University of Southern California.

New technologies and cloud infrastructures are changing the landscape of research, not just in computer science, traditional informatics, and high-performance computing (HPC) areas but also more pervasively across academic disciplines. Quantitative changes, including fundamental changes in scale, are leading to qualitative changes in research strategies. Big data and cloud technologies — mere buzzwords a few years ago — have matured and become essential for research computing; they also make faculty collaborations nationally and globally not only possible but also extraordinarily important for universities.

In this article, based on my EDUCAUSE Live Webinar,[1] I address how we can better prepare faculty and students for the types of collaboration possible in the evolving world of cloud storage, big data, fast networks, and dazzling visualizations, and I offer case studies to further illuminate research strategies and opportunities.

Rethinking Institutional Support

Our most important job as CIOs and IT leaders might well be to boost faculty research, facilitate teaching, enable our faculty to be as competitive as possible, give our students meaningful research experiences, and enhance the reputations of our universities. Whatever the approach, whatever the themes, it is crucial to pay attention to what matters most for particular kinds of research, programs, or applications.

In higher education, the landscape is changing rapidly. Federal and state agencies clearly have limited funds. If you visit the websites of major federal funding agencies, you will see that most emphasize the need to focus on interdisciplinary research and multi-institutional collaborations. In addition, research investments from federal agencies increasingly go to projects specifically tied to informatics and digital learning.

Faced with this new landscape, we must ask ourselves the following questions:

- What is needed beyond infrastructure to support research?

- What specifically are we doing to assist our faculty?

- How do we measure ourselves when it comes to supporting global research?

- Do our programs support formal, measurable activities to engage students (as future faculty) in the research enterprise?

Although we do not yet have all the answers, we should address these and other related institutional questions as we consider our path forward.

Trends in Research Computing

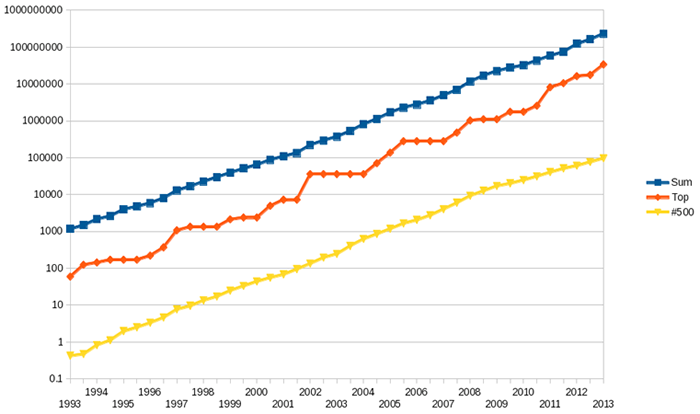

Essential research technologies are really coming into their own. A key example is HPC, as the graph from Wikimedia Commons illustrates. Figure 1 shows the numerical performance of the top 500 supercomputers over the past 20 years in GFLOPS (billions of floating-point operations per second). Although not an ideal measure, GFLOPS still conveys the trend in HPC systems that are within reach of many of our faculty members. The orange line in the middle represents the world's fastest high-performance computer; the yellow line at the bottom is the 500th-fastest; and the blue line at the top is the aggregate of all of the world's top 500 high-performance systems.

Figure 1. Numerical performance of the top 500 supercomputers (in GFLOPS)

Despite funding reductions at many institutions, the performance of these systems continues to grow at approximately the same rate, with no signs of slowing. In addition, new countries have emerged as supercomputing powers. China, for example, had the top-performing system in 2013. Rather than seeing China's ranking as a setback for U.S. institutions, we might view it as an indication that there are more peers in this space; it also might encourage U.S. researchers to partner with colleagues across the world to ensure effective access to the most powerful systems.

Institutions can provide access to HPC systems in three ways:

- A "condominium" model, in which processors are shared among a pool of investors, while individual researchers are guaranteed priority access to the systems they purchased

- Development environments that allow each research group to develop and optimize its code locally before moving to powerful systems at national labs or other resources

- A formal research arrangement with another university or national or regional research facility
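The first option, the "condominium" model, comes down to a priority rule. Owners come first on the nodes they bought, and anyone in the pool may borrow nodes that are idle. The Python sketch below is a minimal, hypothetical illustration of that rule. The `Node` and `Condo` classes, the group names, and the single-node jobs are all assumptions. Production clusters implement this policy through a batch scheduler, not code like this.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Node:
    name: str
    owner: str                     # research group that purchased this node (hypothetical)
    running: Optional[str] = None  # group whose job currently occupies the node


@dataclass
class Condo:
    nodes: list[Node] = field(default_factory=list)

    def submit(self, group: str) -> Optional[str]:
        """Place one single-node job for `group`; return the node name, or None if full."""
        owned = [n for n in self.nodes if n.owner == group]
        # 1. Owners first: use an idle node the group owns...
        for node in owned:
            if node.running is None:
                node.running = group
                return node.name
        # ...or reclaim an owned node that a guest is borrowing.
        for node in owned:
            if node.running != group:
                node.running = group  # guest is simply displaced in this sketch
                return node.name
        # 2. Otherwise borrow any idle node from the shared pool.
        for node in self.nodes:
            if node.running is None:
                node.running = group
                return node.name
        return None  # no capacity; a real system would queue the job


condo = Condo([Node("n1", "chem"), Node("n2", "chem"), Node("n3", "physics")])
for group in ["physics", "physics", "chem", "chem"]:
    print(group, "->", condo.submit(group))
```

In this run, the second physics job borrows the idle chemistry node n1. It is displaced as soon as the chemistry group needs that node back. A real scheduler would requeue or checkpoint the displaced job rather than dropping it.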

It is very important to be part of this broader ecosystem. You do not have to own it; you merely need institutional access to it — usually through collaboration and strong network planning.

It is a positive sign when your faculty members partner with institutions that have enormous capabilities in places like China; in such cases, your best investment is often to ensure end-to-end network connectivity (via partnerships) rather than to invest in less capable computational systems of your own.

So, what about telecommunications? Strong national programs exist to help campuses move forward with high-performance networking. The U.S. National Science Foundation's Campus Cyberinfrastructure-Network Infrastructure and Engineering (CC-NIE) program provides funding to campuses to improve network performance and capabilities for specific research applications. It has had great success and has prompted many of us to work together to learn how to provide new capabilities.

As figure 2 shows, a clear national direction in research computing exists that your campus can build on, including Internet2 and state networks such as the Corporation for Education Network Initiatives in California (CENIC), the New York State Education and Research Network (NYSERNet), and many others. Sure, we have challenges with the last five miles when connecting our campuses, but things are moving forward. A key question, however, is where we go next. The map of the United States (with many more lines throughout every state) must connect to a global map that shows how we can help researchers on one campus collaborate with partners in Japan, China, Europe, or other parts of the world. Our next step is to build across the world the kind of network infrastructure that we have built in the United States to connect our vital research facilities.

Figure 2. The 100-gigabit Advanced Networking Initiative (ANI)[2]

We are truly moving into the age of "global instruments." One institution might have a visualization facility, while another has an imaging facility, and a third has medical devices integrated with the environment. Rather than imagining all the research "core" resources as existing on one campus, we are increasingly sharing research infrastructure with peer institutions in a formal, strategic way. And while networks can carry the data where they need to go, the next frontier is the management of "big data."

Big Data

Big data, although a term in common use, is a bit of a misnomer: big data is not simply a matter of size. The notion of big data comprises volume, variety, and velocity, and it also raises the questions of how to move large data sets around and how to define a vision that makes sense of them.[3]

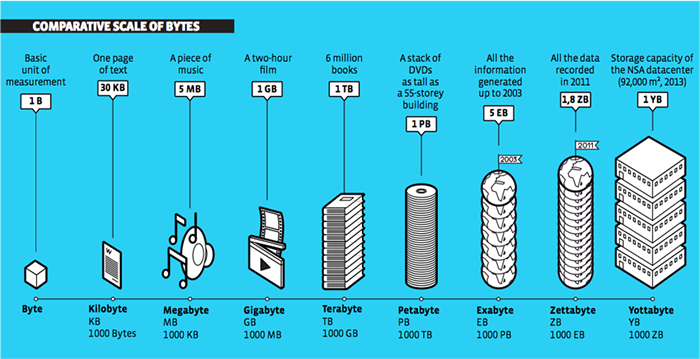

We have all seen slides similar to figure 3. The terabyte went from being an exotic term to a consumer standard on practically every computing system. We are now moving the scale of typical, large-end research applications into the petabyte range. The Large Hadron Collider (LHC) generates roughly 30 petabytes of data per year.[4] The Large Synoptic Survey Telescope is expected to generate 15 terabytes of raw data nightly.[5] The storage capacity of the new National Security Agency data center, although classified, is rumored to be in the yottabyte range;[6] a yottabyte is a thousand zettabytes, and a zettabyte is a thousand exabytes. Very large data capabilities are moving within reach of most research faculty, and even some consumers.

Courtesy Patrice Koehl, UC Davis

Figure 3. Comparative scale of bytes
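The byte prefixes and data volumes quoted above are easy to check. This Python sketch assumes decimal (SI) prefixes, where each step is a factor of 1,000. Binary units such as the tebibyte would give slightly different numbers. The sketch converts two of the figures in the text into more intuitive terms. The LSST line also assumes, for illustration only, that the telescope observes every night of the year.

```python
# Decimal (SI) byte prefixes: each step up is a factor of 1,000.
PREFIXES = ["kilo", "mega", "giga", "tera", "peta", "exa", "zetta", "yotta"]
BYTES = {f"{p}byte": 1000 ** (i + 1) for i, p in enumerate(PREFIXES)}

SECONDS_PER_YEAR = 365 * 24 * 3600


def average_gbps(num_bytes: float, seconds: float) -> float:
    """Average rate, in gigabits per second, needed to move num_bytes in `seconds`."""
    return num_bytes * 8 / seconds / 1e9


lhc_per_year = 30 * BYTES["petabyte"]    # "roughly 30 petabytes of data per year"
lsst_per_night = 15 * BYTES["terabyte"]  # "15 terabytes of raw data nightly"

print(f"LHC: about {average_gbps(lhc_per_year, SECONDS_PER_YEAR):.1f} Gbit/s, averaged over a year")
# Assumes (for illustration only) an observation every night of the year.
print(f"LSST: about {365 * lsst_per_night / BYTES['petabyte']:.1f} PB of raw data per year")
```

The LHC's annual volume works out to a sustained average of roughly 7.6 Gbit/s. That is a sizable share of a 10-gigabit link even before peaks are taken into account.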

We do not, however, always have the capability to provide such data volumes on our campuses. As a result, researchers who do not necessarily need the compute cycles provided by HPC may request them simply to gain access to large data sets. It is important to understand that compute cycles and large data sets are synergistic but not the same thing. A campus storage strategy will affect the humanities, the creative arts, and many other areas in ways that HPC has achieved only slowly (mostly because of the complexity of HPC software). Additionally, we often think of petabytes of data as generated by research in the physical sciences and engineering, but researchers in other disciplines also study data (including Internet data) that are intrinsically large.

Data Transfer and Management

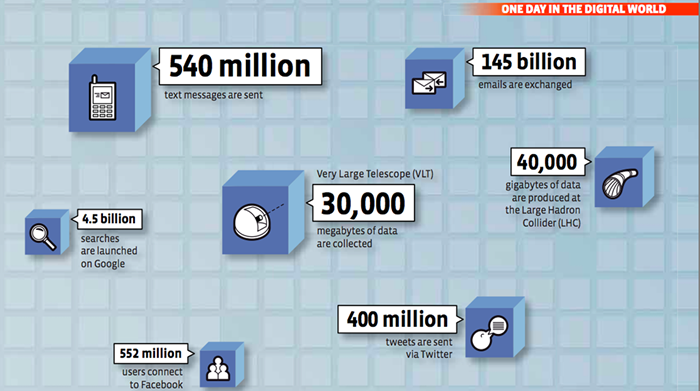

The existence of large data sets raises the questions of how to provide those data to our individual applications and how to transfer the data from one institution to another. Figure 4 gives a sense of the scale of common aggregate services.

Courtesy Patrice Koehl, UC Davis

Figure 4. One day in the digital world

Transferring a large repository of MRI images of Alzheimer's patients or the multimedia testimonies of genocide witnesses could easily use 5 to 10 percent (or more) of a 100-gigabit line across the country.[7] Given this, there is an urgent need to find ways to manage these large data sets efficiently and securely. None of us yet has the answers on how to put critical institutional data on the global Internet with confidence.
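To make the 5 to 10 percent figure concrete, here is a rough estimate of how long a sustained transfer would take. The payload is the roughly nine-petabyte Visual History Archive described in the Shoah Foundation case study. The function name is hypothetical. The model is optimistic: it ignores protocol overhead, retransmissions, and storage bottlenecks.

```python
def transfer_days(num_bytes: float, link_gbps: float, share: float) -> float:
    """Days needed to move num_bytes using `share` (0 to 1) of a link rated at link_gbps.

    Assumes the share is sustained end to end with no overhead (optimistic).
    """
    bits = num_bytes * 8
    rate_bps = link_gbps * 1e9 * share
    return bits / rate_bps / 86_400  # 86,400 seconds per day


ARCHIVE_BYTES = 9e15  # "about nine petabytes" (decimal petabytes assumed)

for share in (0.05, 0.10):
    print(f"{share:.0%} of 100 Gbit/s: about {transfer_days(ARCHIVE_BYTES, 100, share):.0f} days")
```

At these rates, moving the whole archive would take roughly 83 to 167 days. That is one reason to prefer approaches that avoid bulk copying.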

One dean said to me, "I need all my information on campus so that we can make sure to manage it before it goes out to everybody." Despite that sentiment, however, economics will increasingly force our data into the cloud over the next two years. Some institutions are forcing the issue by reducing or ending investments in on-campus data centers. Solutions must and will be found that intelligently manage community data in a hierarchical, distributed fashion.

The model of downloading as much as possible from national (or global) repositories and then figuring out how to handle the data locally is an enormous waste of time, money, and bandwidth. The best models (known collectively as "slice and dice") will let us visualize the data in place and pull down just the data that we need. This is where we're going. It's a tall order, but we are on our way.
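One common way to "pull down just the data that we need" is to store a large array in fixed-size chunks and fetch only the chunks that overlap a request. The sketch below computes that set of chunks. It is a generic illustration, not the design of any particular repository. The array dimensions, chunk sizes, and 4-byte values are hypothetical.

```python
import itertools
import math


def chunks_for_slice(shape, chunk_shape, start, stop):
    """Indices of the chunks that overlap the box [start, stop) in every dimension.

    Assumes the array is stored as fixed-size chunks; only these chunks
    would need to cross the network to answer the request.
    """
    ranges = []
    for dim, (n, c, lo, hi) in enumerate(zip(shape, chunk_shape, start, stop)):
        if not 0 <= lo < hi <= n:
            raise ValueError(f"bad bounds in dimension {dim}")
        ranges.append(range(lo // c, (hi - 1) // c + 1))  # first..last chunk touched
    return list(itertools.product(*ranges))


# Hypothetical data set: ten years of daily values on a 1800 x 3600 global grid.
shape = (3650, 1800, 3600)
chunk_shape = (365, 180, 360)
bytes_per_value = 4  # e.g., 32-bit floats

needed = chunks_for_slice(shape, chunk_shape, (0, 850, 1800), (365, 1080, 2160))
chunk_bytes = math.prod(chunk_shape) * bytes_per_value
total_bytes = math.prod(shape) * bytes_per_value
print(f"{len(needed)} chunk(s), {len(needed) * chunk_bytes / total_bytes:.2%} of the data set")
```

For the request shown, only 2 of the 1,000 chunks (0.20 percent of the data) would be transferred. The rest stays in place on the remote server.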

Cloud Computing and a Global Infrastructure

Cloud systems will be a critical part of our infrastructure. We will have a hierarchical model, with departmental systems anchored by institution-level systems for efficiency. Beyond that, existing community- and vendor-provided cloud services will increasingly be embraced as minor legal issues are worked out. That way, we can support this full hierarchical range of capabilities while our faculty members continue to depend on and trust us for good advice, including information on where the various systems are located.

In 2012, NASA's Piyush Mehrotra assessed the use of cloud computing for NASA's specific HPC applications. Although the associated formal study concluded that, in 2012, cloud systems were not yet ready to handle NASA's needs, it documented the key future requirements very crisply. My own review suggested that, by 2013, cloud systems were well along in meeting those core requirements. According to my understanding of the study, this year (2014) should be the year that cloud computing is ready to take on NASA's HPC applications.[8]

Recently, with an investment of about $33,000, Mark Thompson, a professor of chemistry at the University of Southern California (USC), used cloud resources equivalent to a top 500 supercomputer, with 156,000 processor cores, for 18 hours. He solved a set of problems and achieved publishable research results involving the behavior of 205,000 molecules.[9] This was real science done through ad hoc use of the cloud: resources were deployed that could be discontinued a day or two later. Although Thompson was pleased with the cloud project's overwhelming success,[10] he noted that, for many of the "what if" problems that he and his graduate students were tackling, his own clusters or campus clusters were easier to use and often more efficient (allowing them to tackle very focused problems step by step). Thompson is a leader in demonstrating the value of a hierarchical service model that lets even a single research group exploit systems at multiple levels, on campus and in the cloud.
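The figures in Thompson's example support a quick back-of-the-envelope estimate. This Python sketch assumes all 156,000 cores were billed for the full 18 hours, which the text does not state. Treat the result as a rough average, not an actual cloud price.

```python
def cost_per_core_hour(total_cost: float, cores: int, hours: float) -> float:
    """Average price of one core-hour for a run of `cores` cores over `hours` hours."""
    return total_cost / (cores * hours)


core_hours = 156_000 * 18
print(f"{core_hours:,} core-hours")                                      # 2,808,000 core-hours
print(f"${cost_per_core_hour(33_000, 156_000, 18):.4f} per core-hour")  # $0.0118 per core-hour
```

At roughly a cent per core-hour, renting this capacity briefly can be far cheaper than owning it. Thompson's remarks above explain why ease of use still favors local clusters for many day-to-day problems.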

Quantum Computers and Other Future Technologies

Quantum computers are one example of the new and innovative systems that may appear on your campus. Such systems are expected to be powerful tools for breaking cryptographic codes, which could give researchers in security a competitive edge. More traditionally, large database searches in areas like medical informatics might in the future take place on quantum computers. Even though these computers might currently play a niche role on your campus, by the time your students become faculty members or are directing R&D operations in industry, access to such systems might be the key competitive advantage. Can you help make such research systems available to your students?[11]

Global networks and cloud services also are developing well as core research infrastructure. We must do more to move them beyond large-scale commodity uses (moving e-mail and YouTube videos) toward linked, powerful research infrastructures, which will soon number in the thousands across the world. The next generation of faculty is here, and their expectations are enormous. Faculty members see what can be done in the global cloud with a set of "apps" that follow them anywhere, and they expect as much from our campuses: global networks and the power of the cloud.

Case Studies

Every campus has its own examples, but, as these case studies show, a common pattern is emerging of sophisticated users with significant demand for interdisciplinary collaborations on a global basis. Students and faculty alike are eager to engage with sophisticated computational systems through rich multimedia.

Case Study: Shoah Foundation Institute

The USC Shoah Foundation Institute (SFI) collects testimonies from survivors of and witnesses to current and historical genocides around the world. Its work is aimed both at dissemination and education — and at ensuring that the data that relate to these horrific incidents are preserved objectively for the next 100 years and beyond.

The institute's Visual History Archive (VHA) holds about nine petabytes of data and is growing. It contains 51,696 testimonies in 33 languages, representing 105,000 hours of testimony from 57 countries. The archive has 235,005 master videotapes. To fulfill its worldwide educational mission, the USC SFI required a cost-effective, efficient way to convert analog videotapes to digital format and to share these data with students and researchers around the world, while at the same time correcting deficiencies in the recording process.
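The archive figures above give a sense of why video dominates storage. This Python sketch divides the quoted total by the quoted hours of testimony. It assumes decimal petabytes. The nine-petabyte total may include several copies or formats of each recording, so the result is an average over everything stored, not the bit rate of any single file.

```python
ARCHIVE_BYTES = 9e15       # "about nine petabytes" (decimal petabytes assumed)
TESTIMONY_HOURS = 105_000  # hours of testimony quoted in the text

bytes_per_hour = ARCHIVE_BYTES / TESTIMONY_HOURS
avg_mbps = bytes_per_hour * 8 / 3600 / 1e6  # same volume expressed as an average bit rate

print(f"about {bytes_per_hour / 1e9:.0f} GB stored per hour of testimony")
print(f"equivalent to about {avg_mbps:.0f} Mbit/s of stored data per second of video")
```

Roughly 86 GB per hour of testimony helps explain why digitization, correction, and streaming all call on HPC and high-speed networks.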

A key aspect of many (but not all) big data problems is that they have HPC components. The accompanying video shows the results of some innovative algorithms for correcting video recording errors. Given the volume of data, high-speed networks and computing engines make the process highly effective and, in many cases, automatic.

The video correction process (courtesy of SFI)

Given the critical subject matter, these data sets are part of an international record that must be preserved forever. In practical terms, this means ensuring that the materials can be viewed 100 years from now. The next video illustrates key components of long-term preservation, focused on ensuring that even minor bit errors are corrected long before uncorrectable "bit rot" takes place (which can occur quite quickly given the large amounts of data).

The next two videos show other ways in which the archive relies on advanced infrastructure: USC's 500-plus-teraflop HPC system and research networking.

Preservation (courtesy of SFI)

High-performance computing center (courtesy of SFI)

Research networking (courtesy of SFI)
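The preservation strategy described above catches minor bit errors before they accumulate into uncorrectable bit rot. It is commonly implemented as periodic fixity checking. Each stored object has a recorded checksum, and every copy is rehashed on a schedule. A copy that fails the check is repaired from one that still verifies. The following is a minimal in-memory Python sketch of that general technique, not SFI's actual system. The use of SHA-256 and the replica names are assumptions.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Fixity value (checksum) recorded for one stored object."""
    return hashlib.sha256(data).hexdigest()


def audit_and_repair(replicas: dict[str, bytes], expected: str) -> list[str]:
    """Verify every replica against the recorded digest and repair any that fail.

    Returns the names of repaired replicas. Raises if no intact copy survives,
    which is the situation periodic audits are meant to prevent.
    """
    good = [name for name, data in replicas.items() if sha256_hex(data) == expected]
    if not good:
        raise RuntimeError("no intact replica left; the object cannot be repaired")
    source = replicas[good[0]]
    repaired = []
    for name in list(replicas):
        if sha256_hex(replicas[name]) != expected:
            replicas[name] = source  # overwrite the damaged copy with a verified one
            repaired.append(name)
    return repaired


# Hypothetical example: three copies of one object, one with a single flipped bit.
original = b"testimony master, digitized frame data"
digest = sha256_hex(original)
flipped = bytes([original[0] ^ 0x01]) + original[1:]
replicas = {"disk_a": original, "tape_b": flipped, "cloud_c": original}

print(audit_and_repair(replicas, digest))  # ['tape_b']
print(audit_and_repair(replicas, digest))  # []
```

The design depends on redundancy: a checksum can only detect damage, so repair requires at least one other copy that still verifies. Auditing often enough keeps that condition true.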

Finally, SFI's mission is to provide educational content and student-friendly software to manipulate that content. The archive streams videos over Internet2 to more than 50 universities, high schools, and institutions around the world.

Streaming microorganisms (courtesy of Richard Weinberg, USC School of Cinematic Arts[13])

Case Study: Streaming Marine Biology

This case study highlights the work of Richard Weinberg, research associate professor in the USC School of Cinematic Arts, who used a new generation of super-high-resolution tools both to enable digital cinema microscopy and to unite marine biology with cinema technology. Weinberg received the Innovations in Networking Award from the Corporation for Education Network Initiatives in California (CENIC) for his work magnifying and streaming images of marine microorganisms that are invisible to the naked eye. As Weinberg stated, "Imagine magnifying a microorganism that is invisible to the naked eye, capturing it with a digital camera through a microscope, streaming it from L.A. to Tokyo and projecting it there live with the quality of today's digital cinema theaters. It gives the audience the opportunity to experience the microworld as never before."[12] With applications for education, research, and medicine, this project required a special microscope, capable of magnifying the microorganisms to 33,360 x 10,349 pixels, and access to a global, high-speed educational network. Weinberg's work not only moves the state of the art forward but also shows what we might call radical interdisciplinarity, whereby researchers from truly disparate fields work together to shed new light on a subject.

Case Study: Brain Imaging and Genomics — The ENIGMA Project

We all know the importance of moving the global medical establishment fully into an electronic world. This matters not only to help control the high cost of care (which has been estimated to have reached almost 18 percent of U.S. GDP[14]) but, just as importantly, to reap the insights that come from sharing the high-volume, high-value information contained in medical records, images, and laboratory results. A report from McKinsey & Company notes that Kaiser Permanente's HealthConnect computer system "has improved outcomes in cardiovascular disease and achieved an estimated $1 billion in savings from reduced office visits and lab tests."[15]

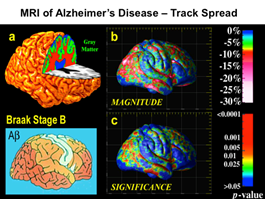

Today, health systems across the world are estimated to store more than 100 exabytes of data (1 exabyte equals 10^18 bytes).[16] This case study demonstrates an area in which the factors involved in a devastating medical illness such as Alzheimer's are so subtle that very large data analyses are essential to sort the wheat from the chaff. The Enhancing Neuro Imaging Genetics through Meta Analysis (ENIGMA) project was founded by Paul Thompson, professor of neurology, psychiatry, radiology, engineering, and ophthalmology and director of the USC Imaging Genetics Center; and Nick Martin, senior scientist and senior principal research fellow, QIMR Berghofer Medical Research Institute. ENIGMA is a $140 million brain-imaging study sponsored by the U.S. National Institutes of Health and based on the brain scans and genomic data of more than 26,000 people. The project includes a global consortium of researchers at 125 institutions who have amassed numerous super-high-resolution brain images with the goal of identifying the changes in DNA code that cause Alzheimer's and other diseases. In some cases, brain decline can be caused by a change in a single letter of the DNA code.

This is truly a global-scale partnership requiring high-resolution MRI systems to share information on a large and growing number of patients. Although faculty know how to build partnerships across the world, they lack the tools to build the physical infrastructure that connects their building blocks. Regional and national networking infrastructure is maturing, but how do we ensure that petabytes of data can be moved regularly for individual projects such as this one on a shared bit highway? Who decides the best approach (and best for whom)? Who decides the priority? Who pays?
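The questions above have no single technical answer. Still, a network operator answering "who decides the priority" could adopt one well-known policy: weighted max-min fairness. Under this policy, no project receives more bandwidth than it asks for, and spare capacity is divided among the rest in proportion to agreed weights. Below is a minimal Python sketch of that policy. The project names, demands (in Gbit/s), and weights are hypothetical, and weights are assumed to be positive.

```python
def weighted_max_min(capacity: float, demands: dict[str, float],
                     weights: dict[str, float]) -> dict[str, float]:
    """Split link `capacity` among flows by weighted max-min fairness (water-filling).

    A flow never gets more than its demand; capacity freed by satisfied flows
    is redistributed to the remaining flows in proportion to their weights.
    """
    alloc = {f: 0.0 for f in demands}
    active = set(demands)
    remaining = capacity
    while active and remaining > 1e-12:
        total_w = sum(weights[f] for f in active)
        share = {f: remaining * weights[f] / total_w for f in active}
        satisfied = {f for f in active if demands[f] - alloc[f] <= share[f]}
        if not satisfied:
            # Every remaining flow wants more than its share: hand out the shares and stop.
            for f in active:
                alloc[f] += share[f]
            break
        for f in satisfied:
            remaining -= demands[f] - alloc[f]
            alloc[f] = demands[f]
        active -= satisfied
    return alloc


demands = {"brain_imaging": 60, "video_archive": 10, "other": 50}  # hypothetical
weights = {"brain_imaging": 2, "video_archive": 1, "other": 1}     # hypothetical
print(weighted_max_min(100, demands, weights))
```

With these weights, the 100 Gbit/s link is split 60/10/30. With equal weights, the same demands would yield 45/10/45. Choosing the weights is the governance question the text raises.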

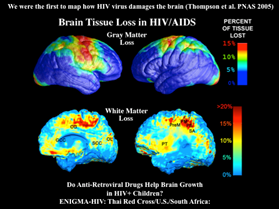

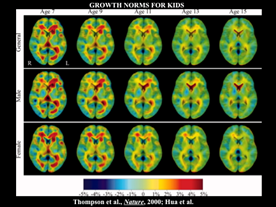

Figures 5, 6, and 7 show images from the ENIGMA project.

Courtesy of Paul Thompson, USC

Figure 5. MRI tracking the spread of Alzheimer's in the brain

Courtesy of Paul Thompson, USC

Figure 6. Loss of brain tissue with HIV/AIDS

Courtesy of Paul Thompson, USC

Figure 7. Norms for brain growth in children

The Grand Challenge Internship Program (courtesy of the Southern California Earthquake Center)

Case Study: Undergraduate Education

Innovation in the future depends on engaging our most important resource: our students. This case study focuses on undergraduate education and offers a great example of faculty members involving undergraduates in their research. Directed by Tom Jordan, W.M. Keck Foundation Professor of Earth Sciences at USC, the Southern California Earthquake Center (SCEC) integrates HPC capabilities into the curriculum, giving undergraduates access to the same advanced systems that researchers use. When these students graduate, they can say they have used some of the most advanced computing systems in the world.

The ability to bring real research experiences to our undergraduates — whether at our home institution or at a partner's site — is a key goal, and central IT organizations are often in a position to create economies of scale in providing such services to faculty and students. Think of the advantage to your undergraduate students if they are able to publish papers before they enter graduate programs or find jobs in the field.

Case Study: Real-World Applications of Nano Bubbles

The final case study is a set of large-scale visualizations that relate to a traditional materials application. The first set of videos, provided by Priya Vashishta, USC professor of materials science, computer science, and physics and astronomy, illustrates the effects of nanobubble collapse in "breakable" water (water whose molecules can break apart in the simulation) on a silica plate. These simulations help researchers study how water behaves under compression: how it changes, decomposes, and reacts under pressure. The first video simulates an empty bubble, while the second demonstrates how damage to the silica plate is reduced by filling the bubble with helium gas.

Nano-bubble collapse under shock (courtesy of Priya Vashishta, USC)

Nano-bubble collapse under shock (gas) (courtesy of Priya Vashishta, USC)

Although these might look like other simulations you have seen over the past 10 years, a key difference is that these are likely far larger (and more accurate). The simulation was run at Argonne National Laboratory on the Blue Gene/P, using 164,000 processors. It required 65 million processor-hours of computing, at a cost of about $6.5 million. This is a one-billion-atom simulation, one of the largest such simulations ever done.
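The figures above imply a minimum wall-clock time and a unit cost. This Python sketch makes one assumption that the text does not confirm: that the 65 million compute hours are processor-hours spread evenly across all 164,000 processors at once. The actual job schedule is not described.

```python
PROCESSORS = 164_000
PROCESSOR_HOURS = 65e6  # "65 million compute hours", read as processor-hours
COST_USD = 6.5e6

wall_clock_hours = PROCESSOR_HOURS / PROCESSORS
print(f"~{wall_clock_hours:.0f} hours (~{wall_clock_hours / 24:.1f} days) with every processor busy")
# ~396 hours (~16.5 days) with every processor busy
print(f"${COST_USD / PROCESSOR_HOURS:.2f} per processor-hour")
# $0.10 per processor-hour
```

Even on one of the largest machines available, a run of this size would occupy the whole system for more than two weeks. That helps explain why such simulations remain rare.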

This research has implications across many industries, including nuclear waste storage and disposal. Further, related research reveals the microscopic mechanisms at work under high-velocity impact by simulating the behavior of a particular ceramic material (aluminum nitride, which is used in bulletproof vests) when it is pierced by a hard impactor such as a bullet. In the first example, the bullet is considered "infinitely hard" and pierces the ceramic plate completely. In the second simulation, a "real bullet" decomposes inside the ceramic plate; if used in a real vest, this material would literally be a lifesaver.

So, indeed, this is basic research regarding the nature of specific materials, but it matters in many ways: in the groundbreaking scale of the simulations and in how it might lead to practical, real-world solutions.

Infinitely hard impactor (courtesy of Priya Vashishta, USC)

Real impactor (courtesy of Priya Vashishta, USC)

Discussion

As this exploration of key issues shows, there are several important things to keep in mind as we move forward. First, as CIOs and IT administrators, we are investors in a continuing research revolution with global and multi-disciplinary impact, and we play a key role in the outcome. We must ensure that we understand and communicate the kind of impact that we can have, including to our senior administrators, who will also benefit from an awareness of just how profound the changes have become in the nexus between innovative research and global information technology.

Second, I often hear people say that there isn't much going on in the nontraditional disciplines. As I've shown here, this could not be further from the truth. Many people on campuses across the world are ready to push the envelope in these areas. They might not use facilities on their own campus, perhaps because of costs or because they aren't aware of available technologies or maybe because they require additional technical support to get under way. In any case, a partnership with the IT organization is ideal for faculty who are building their expertise and capacity as they consider larger-scale projects. Projects in the social sciences, humanities, and performing arts are often as compelling, complex, and large scale as those from the traditional fields.

Third, many applications entail interdisciplinary work, so how we bring partners together across disciplines is extraordinarily important. A successful effort will provide the programs, tools, and services that allow faculty to easily discover each other. That is how IT will have a major impact.

Fourth, we are starting to see major conversations (such as through Internet2's Net+ program) about how we identify and support the kinds of transformative software applications and tools that can really advance our research and teaching programs. For decades to come, many of these specialized tools will come from the academy and national labs rather than from industry. How do we support and disseminate them?

Finally, the revolution is not about the cloud per se; it is about the new ecosystem, including local, campus, and community and vendor cloud services. Those who insist everything must stay on campus "in the data center" as well as those who announce "it's all going to the vendor cloud" speak prematurely; too much is at stake and too much invention is required to make all this work any single way. Well into the next decade, it's going to be a very strategic balance, and we're going to have to decide how to invest individually and collectively. We will experiment, fail here and there, and then innovate.

Conclusion

Because we find ourselves in a period of intense innovation, we must keep our students always in mind. Undergraduates will be the faculty of the future, and they will be driving the next generation of research programs and technologies. Getting them ready today is critically important.

I have emphasized global, interdisciplinary partnerships because of their importance to faculty. To support our faculty well, we must take a comprehensive approach and enable access to resources wherever they may be, even as doing so moves us outside our comfort zone. Faculty depend on us and our administration to provide these building blocks; only we can sort out challenges such as long-range networking and how to co-invest in shared facilities at scale (whether big data, HPC, visualization, or emerging technologies).

If we help our faculty succeed, they will do everything they can to support us, which in turn will allow us to do more for our faculty community. It is a great time to be leaders together in a great project that promises to transform university research. It has only just begun, but the revolution is here.

1. This article is based on my EDUCAUSE Live Webinar from May 13, 2014, which contains additional case studies and visuals.

2. Charles Rousseaux, "New 100 Gbps Network Will Keep America on Cutting Edge of Innovation," U.S. Department of Energy, November 15, 2011.

3. Patrice Koehl, professor of computer science at the University of California, Davis, collaborated with me and others on a major data sciences initiative in 2012–2013, created this taxonomy, and offered other valuable insights.

4. Transfer throughput of the Worldwide LHC Computing Grid, CERN, on July 24, 2014.

5. Large Synoptic Survey Telescope, LSST Brochure, revised January 2009.

6. Governor Gary Herbert, 2012 Energy Summit, Utah.gov, accessed July 24, 2014.

7. In fact, such a project is under way at the University of Southern California, within the USC Shoah Foundation Institute and USC Digital Repository, in a partnership with Clemson University.

8. Piyush Mehrotra, "Performance Evaluation of Amazon EC2 for NASA HPC Applications," presentation, 3rd Workshop on Science Cloud Computing, 2014.

9. Stephen Shankland, "Supercomputing Simulation Employs 156,000 Amazon Processor Cores," CNET, November 12, 2013.

10. Mark Thompson, personal communication, May 2014.

11. At USC, codes are developed and tested first on our campus HPC systems, then tuned for the quantum computer. This gives us a great "test" environment that is broadly accessible.

12. Ryan Gilmour, "Extremely Tiny and Incredibly Far Away," USC News, February 3, 2013.

13. The audio portion of this video is Wolfgang Amadeus Mozart, Bassoon Concerto in B-flat Major, K. 191: I. Allegro, performed by the Skidmore College Orchestra.

14. Basel Kayyali, David Knott, and Steve Van Kuiken, "The Big-Data Revolution in US Health Care: Accelerating Value and Innovation," McKinsey & Company, April 2013.

15. Ibid.

16. Graham Hughes, "How Big Is 'Big Data' in Healthcare?" blog, SAS, October 21, 2011.

© 2014 Peter M. Siegel. The text of this EDUCAUSE Review online article is licensed under the Creative Commons Attribution-Noncommercial-No Derivative Works 4.0 license.